Google Cloud’s Vertex AI is a comprehensive and fully managed platform designed to democratize AI development across industries.

Vertex AI, Google’s AI platform, stands out for its access to many AI models, including the advanced Gemini series. These models span multiple modalities, allowing users to handle tasks ranging from image and video analysis to natural language processing and code generation.

As a unified AI platform, Vertex AI accelerates AI deployments and ensures they are scalable, secure, and aligned with organizational data strategies.

Vertex AI

Vertex AI is a fully managed, unified AI development platform provided by Google Cloud, designed to enable data scientists, developers, and researchers to accelerate the deployment and industrialization of AI solutions.

Vertex AI offers tools and infrastructure to streamline the entire lifecycle of AI projects, making advanced machine learning accessible to experts and non-experts.

With pre-integrated tools for tasks such as model evaluation, ML operations (MLOps), and feature management, the platform lets users focus on model innovation rather than the underlying operations.

With Google Cloud’s robust infrastructure, users can scale their AI solutions efficiently, handling spikes in data processing requirements and deploying models globally without managing hardware.

Vertex AI supports various machine learning models, including the advanced Gemini models, for tasks that require handling complex, multimodal data.

It offers tools such as Vertex AI Workbench, a JupyterLab-based notebook environment, and integration with Colab for a seamless development experience.

The platform includes purpose-built tools to manage and automate the ML lifecycle, such as Vertex AI Pipelines for workflow orchestration and Vertex AI Model Registry for model management.

By seamlessly integrating with other Google Cloud services like BigQuery and Cloud Storage, Vertex AI provides a unified view of an organization’s data assets, enabling more coherent and robust data-driven solutions.

Businesses can use Vertex AI to deploy AI-driven solutions rapidly, improving decision-making and automating routine tasks.

With no-code and low-code options, developers can quickly build and test AI applications without deep expertise in machine learning.

Data scientists and ML engineers benefit from an extensive toolkit for training, tuning, and deploying models, including capabilities for handling large-scale, complex datasets.

Capabilities

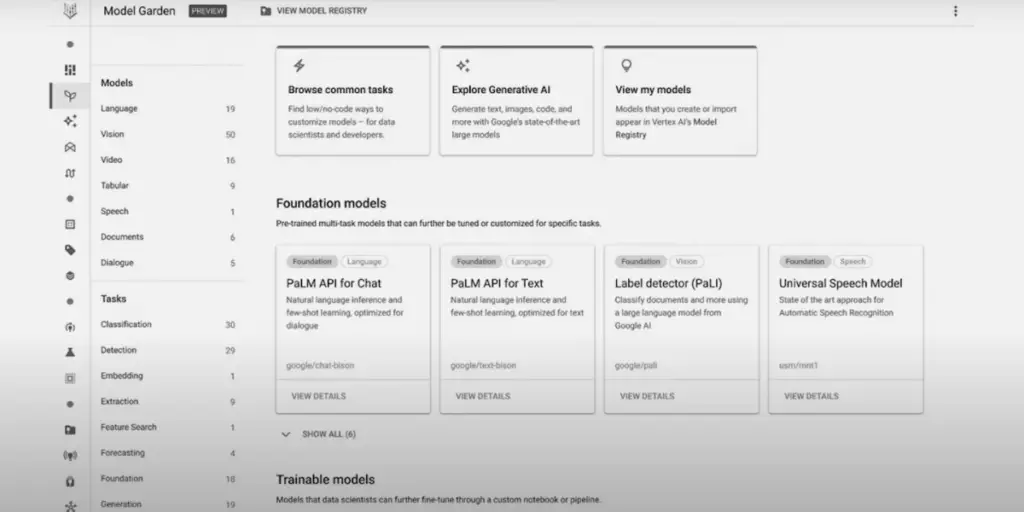

Users can access over 130 generative AI models, including Google’s own models, such as Gemini and Imagen, and third-party models such as Anthropic’s Claude 3.

The Gemini models are particularly noteworthy for their ability to understand and generate outputs from various data types, including text, images, video, and code.

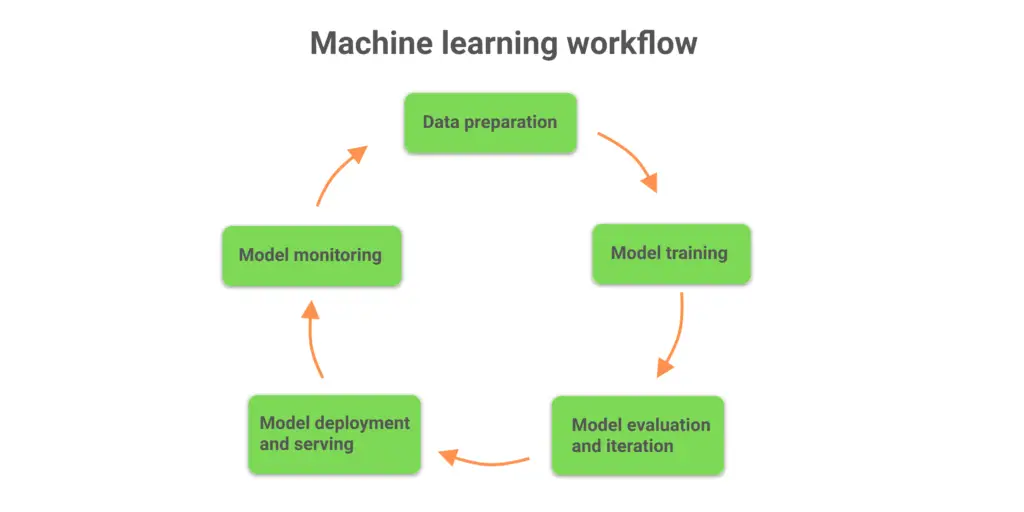

Vertex AI provides a unified platform to manage the entire ML lifecycle, from training and testing to tuning and deploying machine learning models.

Tools such as Vertex AI Studio and Agent Builder support rapid prototyping and deployment of AI applications. Vertex AI Studio offers an interactive environment for experimenting with models, while Agent Builder is a no-code solution for creating AI-driven interactions.

Vertex AI leverages Google Cloud’s infrastructure to optimize compute resources, reducing the time and cost of training complex models.

Vertex AI includes prebuilt algorithms and templates that can be customized to suit specific business needs, speeding up the time from concept to deployment.

Vertex AI is natively integrated with BigQuery and other Google services, facilitating easy access to data across all cloud assets and simplifying data management and analytics.

Vertex AI Feature Store is a centralized repository for storing, retrieving, and sharing ML features that keeps training and serving pipelines consistent.

Whether scaling up for training larger models or deploying globally, Vertex AI provides a flexible environment that adjusts to varying workload demands.

Users can choose from various hardware options, including GPUs and Google’s custom-designed TPUs, to balance performance and cost.

Vertex AI Pipelines helps orchestrate complex workflows through a managed service that simplifies creating, automating, and monitoring ML pipelines.

Vertex AI Model Registry manages model versions and tracks model performance over time, helping teams deploy only their best-performing models.

For users with limited ML expertise, AutoML provides tools to automatically generate high-quality custom models based on provided data.

Vertex AI can serve real-time or batch predictions and offers tools for generating actionable insights and recommendations based on model outputs.

Gemini Models in Vertex AI

Gemini models are highly versatile and capable of handling various tasks across different modalities, making them a central feature of Vertex AI.

Gemini models are designed to understand and process various types of input data, including text, images, videos, and code. This multimodal capability allows them to perform complex tasks that require integrating information from different sources.

Built on recent advances in machine learning, Gemini models use deep learning techniques to deliver strong performance in tasks such as language understanding, image recognition, and content generation.

Gemini models can generate text and code from prompts that mix text, images, and video, and Vertex AI complements them with dedicated media models such as Imagen for creating and editing images. This makes the platform particularly useful in creative industries and media.

These models can perform complex reasoning tasks, which can be applied in scenarios like data analysis, problem-solving, and decision support systems.

With the ability to understand and generate code, Gemini models are valuable tools for software development, assisting with code generation and bug fixing.

Gemini models are accessible via the Vertex AI API, allowing developers to integrate these powerful AI capabilities seamlessly into their applications.

Vertex AI provides an intuitive interface for experimenting with Gemini models, where users can input prompts and receive outputs directly within the platform.

Gemini models reduce the time and effort required to develop complex AI functionalities thanks to their pre-trained nature and the comprehensive support provided by Vertex AI.

Despite being pre-trained, Gemini models offer a high degree of customization, allowing developers to fine-tune them to specific needs and contexts.

Tools and Integrations

Vertex AI Workbench is an integrated development environment that supports interactive data analysis and machine learning on a scalable infrastructure.

Workbench provides a familiar, notebook-based development environment enhanced with Google Cloud’s scalability and security, allowing data scientists to collaborate and move projects from exploration to production seamlessly.

Vertex AI is natively integrated with BigQuery, Google’s serverless, highly scalable, and cost-effective multi-cloud data warehouse.

Users can directly access and query massive datasets stored in BigQuery within their AI workflows, enabling powerful data-driven model training and analytics without extensive data movement.

Users can choose between Colab Enterprise and Vertex AI Workbench, managed notebook environments optimized for machine learning and deeply integrated with Google Cloud services.

These notebooks provide a managed service to create and manage virtual machine instances pre-configured with popular data science frameworks and libraries, facilitating easy sharing and collaboration.

Vertex AI’s training and prediction services form a suite of tools for training ML models and generating predictions, including managed services for deploying and monitoring models for both batch and real-time predictions.

These services simplify model deployment, support custom training routines, and integrate with AutoML for automated model optimization.

Vertex AI Pipelines facilitates the creation, orchestration, and automation of complex machine learning workflows through a managed pipeline service.

By automating and tracking workflows, Pipelines helps standardize and scale ML operations across the organization, improving reproducibility and efficiency.
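Vertex AI Pipelines runs workflows defined with the Kubeflow Pipelines SDK or TFX, where each pipeline is a directed acyclic graph of steps. The sketch below is not that SDK: it uses only Python's standard `graphlib` module (Python 3.9 or later) to illustrate the core orchestration rule, that a step runs only after every step it depends on has finished. The step names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "ingest": set(),
    "validate": {"ingest"},
    "profile_data": {"ingest"},
    "train": {"validate"},
    "evaluate": {"train", "profile_data"},
    "deploy": {"evaluate"},
}


def run_pipeline(graph: Dict[str, Set[str]],
                 run_step: Callable[[str], None]) -> List[str]:
    """Execute steps in dependency order and return the order used.

    TopologicalSorter raises graphlib.CycleError if the graph contains a
    cycle, which is why pipeline graphs must be acyclic.
    """
    order: List[str] = []
    for step in TopologicalSorter(graph).static_order():
        run_step(step)  # a managed orchestrator would launch a container here
        order.append(step)
    return order


if __name__ == "__main__":
    run_pipeline(PIPELINE, lambda step: print(f"running {step}"))
```

Steps with no dependency on each other, such as `validate` and `profile_data`, could run in parallel; a managed orchestrator can exploit that, whereas this sketch runs them one at a time.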

Vertex AI Feature Store is a central place for storing, retrieving, and sharing machine learning features, organizing data so that it is optimized for both training and serving models.

It keeps features consistent between training and prediction time, speeds up feature discovery, and reduces redundant data processing.
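One way a feature store keeps training and serving consistent is by answering both kinds of lookup from the same time-stamped values: training jobs ask for a feature's value as of each example's timestamp (a point-in-time lookup, which keeps future data out of training), while online serving asks for the latest value. The in-memory `ToyFeatureStore` below is a hypothetical sketch of that idea, not the Vertex AI Feature Store API.

```python
import bisect
from typing import Dict, List, Optional, Tuple


class ToyFeatureStore:
    """Hypothetical in-memory store of time-stamped feature values per entity."""

    def __init__(self) -> None:
        # entity_id -> list of (timestamp, value), kept sorted by timestamp
        self._rows: Dict[str, List[Tuple[int, float]]] = {}

    def write(self, entity_id: str, ts: int, value: float) -> None:
        bisect.insort(self._rows.setdefault(entity_id, []), (ts, value))

    def read_as_of(self, entity_id: str, ts: int) -> Optional[float]:
        """Point-in-time lookup: latest value written at or before ts."""
        rows = self._rows.get(entity_id, [])
        i = bisect.bisect_right(rows, (ts, float("inf")))
        return rows[i - 1][1] if i else None

    def read_latest(self, entity_id: str) -> Optional[float]:
        """Online-serving lookup: the most recent value, if any."""
        rows = self._rows.get(entity_id, [])
        return rows[-1][1] if rows else None


if __name__ == "__main__":
    store = ToyFeatureStore()
    store.write("user_42", ts=100, value=3.0)   # e.g. purchases in the last 7 days
    store.write("user_42", ts=200, value=5.0)
    print(store.read_as_of("user_42", ts=150))  # 3.0: value known at t=150
    print(store.read_latest("user_42"))         # 5.0: what online serving sees
```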

AutoML provides tools to automatically generate high-quality custom machine learning models from the data provided, which is particularly useful for users without deep expertise in machine learning.

AutoML accelerates model development by automating feature engineering, model selection, and hyperparameter tuning.
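As a simplified illustration of automated hyperparameter tuning, the sketch below uses random search: sample configurations from a search space, score each one, and keep the best. Random search is a stand-in here, not AutoML's actual method; managed tuning services typically use more sample-efficient strategies such as Bayesian optimization. The search space and `toy_objective` are hypothetical substitutes for a real training-and-validation run.

```python
import random
from typing import Callable, Dict, Tuple

# Hypothetical search space: hyperparameter name -> (low, high) bounds.
SPACE: Dict[str, Tuple[float, float]] = {
    "learning_rate": (1e-4, 1e-1),
    "dropout": (0.0, 0.5),
}


def random_search(objective: Callable[[Dict[str, float]], float],
                  space: Dict[str, Tuple[float, float]],
                  trials: int = 50,
                  seed: int = 0) -> Tuple[Dict[str, float], float]:
    """Sample `trials` configurations uniformly and keep the highest-scoring one."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_params: Dict[str, float] = {}
    best_score = float("-inf")
    for _ in range(trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_objective(params: Dict[str, float]) -> float:
    """Stand-in for validation accuracy; peaks at lr=0.01, dropout=0.2."""
    return -((params["learning_rate"] - 0.01) ** 2) - (params["dropout"] - 0.2) ** 2


if __name__ == "__main__":
    best, score = random_search(toy_objective, SPACE, trials=200)
    print({k: round(v, 4) for k, v in best.items()}, round(score, 6))
```

Real tuners usually sample learning rates on a log scale rather than uniformly; uniform sampling keeps this sketch short.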

Vertex AI offers comprehensive APIs and SDKs for integrating its capabilities with existing applications and services, supporting a variety of programming languages such as Python, Java, and Go.

Model Management in Vertex AI

Vertex AI provides a suite of MLOps tools designed to automate, standardize, and manage large-scale machine learning projects.

These tools facilitate collaboration across teams, enhance the reproducibility of models, and streamline the entire machine-learning lifecycle from development to deployment and maintenance.

Vertex AI Pipelines lets teams create and manage reproducible pipelines that automate machine learning workflows, including data processing, model training, testing, and deployment.

Pipelines keep ML workflows consistent, simplify model updates and maintenance, and support continuous integration and deployment practices.

Vertex AI Model Registry is a centralized repository where teams can manage, share, and version their machine learning models, with lifecycle management from staging to production.

The registry enhances collaboration among data scientists by letting them discover and reuse pre-trained models, track model versions, and ensure that only verified models are deployed.
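The promotion rule described above (track every version, deploy only verified ones) can be sketched in a few lines. `ToyRegistry`, `ModelVersion`, and the `verified` flag are hypothetical; Vertex AI Model Registry exposes its own API for managing versions, which this sketch does not reproduce.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    metrics: Dict[str, float]
    verified: bool = False  # hypothetical flag, e.g. passed review or evaluation gates


@dataclass
class ToyRegistry:
    """Hypothetical registry: ordered versions per model name."""
    models: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, metrics: Dict[str, float],
                 verified: bool = False) -> ModelVersion:
        versions = self.models.setdefault(name, [])
        mv = ModelVersion(version=len(versions) + 1, metrics=metrics, verified=verified)
        versions.append(mv)
        return mv

    def best_deployable(self, name: str, metric: str,
                        minimum: float) -> Optional[ModelVersion]:
        """Highest-scoring verified version that meets the threshold, or None."""
        candidates = [v for v in self.models.get(name, [])
                      if v.verified and v.metrics.get(metric, float("-inf")) >= minimum]
        return max(candidates, key=lambda v: v.metrics[metric], default=None)


if __name__ == "__main__":
    reg = ToyRegistry()
    reg.register("churn", {"auc": 0.81}, verified=True)
    reg.register("churn", {"auc": 0.86})                 # best score, not yet verified
    reg.register("churn", {"auc": 0.84}, verified=True)
    print(reg.best_deployable("churn", "auc", minimum=0.80).version)  # 3
```

Version 2 scores highest but is skipped because it has not been verified, which mirrors the "only verified models are deployed" rule.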

Vertex AI Feature Store provides a unified, organized repository for storing and serving machine learning features, with support for both online and batch feature retrieval.

It reduces redundant data processing, keeps feature sets consistent between training and production, and accelerates the feature engineering phase of machine learning projects.

Vertex AI’s model evaluation tools systematically assess model performance across metrics and datasets, including automated retraining and testing cycles.

Evaluation helps maintain model accuracy and reliability over time, identifies performance degradation, and informs decisions about model updates.

Vertex AI Model Monitoring tracks the performance and health of deployed models, checking for issues such as data drift, prediction skew, and other anomalies that could affect model performance.

Monitoring enables proactive maintenance of models in production, keeping them performing well and prompting adjustments when input data or operating environments change.
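At its core, drift detection compares the distribution of a feature in recent serving traffic against a baseline such as the training data. Vertex AI Model Monitoring's documentation describes using L-infinity distance for categorical features and Jensen-Shannon divergence for numerical features; the sketch below implements both measures on plain dictionaries of bin probabilities. The function names are hypothetical, and this is not the Model Monitoring API.

```python
import bisect
import math
from collections import Counter
from typing import Dict, Iterable, List


def distribution(values: Iterable[str]) -> Dict[str, float]:
    """Normalize category counts into probabilities (assumes non-empty input)."""
    counts = Counter(values)
    total = sum(counts.values())
    return {k: c / total for k, c in counts.items()}


def histogram(values: Iterable[float], edges: List[float]) -> Dict[str, float]:
    """Bin numeric values by sorted edges, then return bin probabilities."""
    return distribution(str(bisect.bisect_right(edges, v)) for v in values)


def l_infinity(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Largest absolute probability difference over all categories."""
    return max(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


def jensen_shannon(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Jensen-Shannon divergence with log base 2, so the result is in [0, 1]."""
    keys = set(p) | set(q)
    m = {k: 0.5 * (p.get(k, 0.0) + q.get(k, 0.0)) for k in keys}

    def kl_to_m(a: Dict[str, float]) -> float:
        return sum(a[k] * math.log2(a[k] / m[k]) for k in a if a[k] > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


def feature_drift(baseline: Dict[str, float], current: Dict[str, float],
                  categorical: bool) -> float:
    """L-infinity for categorical features, Jensen-Shannon for binned numeric ones."""
    return l_infinity(baseline, current) if categorical else jensen_shannon(baseline, current)


if __name__ == "__main__":
    DRIFT_THRESHOLD = 0.1  # hypothetical alert threshold
    baseline = distribution(["web", "web", "app", "app"])  # training data
    serving = distribution(["web", "app", "app", "app"])   # recent traffic
    score = feature_drift(baseline, serving, categorical=True)
    print(score, "ALERT" if score > DRIFT_THRESHOLD else "ok")  # 0.25 ALERT
```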

Vertex AI Experiments lets data scientists track, compare, and manage machine learning experiments efficiently, logging each experiment with its parameters, results, and artefacts.

It provides a comprehensive view of all experiments, making it easier to compare results and select the best models for deployment.

Vertex AI is built with collaboration in mind, offering features such as role-based access control, integration with source control systems, and compliance with security standards.

These features help ensure that ML projects are developed in a secure, compliant environment that supports collaboration without compromising data integrity or access controls.

Vertex AI also supports custom workflows and the automation of routine tasks through its API and SDK integrations.

Cost Structure

Generative AI pricing covers models such as Imagen for image generation, as well as various text, chat, and code generation models.

Generative AI charges are typically based on the number of characters processed, billed per 1,000 characters of input or output and starting at $0.0001 per 1,000 characters.

Training, deployment, and prediction for image, video, tabular, and text data are generally charged per node hour, reflecting the resources used. Image data training starts at $1.375 per node hour; video data starts at $0.462 per node hour.
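To see how these two billing units translate into a bill, the sketch below multiplies usage by the starting rates quoted above. It assumes, for simplicity, that input and output characters are billed at the same rate and that there are no minimums or rounding; actual rates vary by model, region, and modality and change over time, so treat the constants as placeholders.

```python
# Starting rates quoted in this article; placeholders, not current prices.
CHAR_RATE_PER_1K = 0.0001      # USD per 1,000 characters (generative AI)
IMAGE_NODE_HOUR_RATE = 1.375   # USD per node hour (image data training)
VIDEO_NODE_HOUR_RATE = 0.462   # USD per node hour (video data)


def character_cost(input_chars: int, output_chars: int,
                   rate_per_1k: float = CHAR_RATE_PER_1K) -> float:
    """Cost billed per 1,000 characters, assuming one rate for input and output."""
    return (input_chars + output_chars) / 1_000 * rate_per_1k


def node_hour_cost(node_hours: float, rate_per_node_hour: float) -> float:
    """Cost of a job billed per node hour (no minimums or rounding modeled)."""
    return node_hours * rate_per_node_hour


if __name__ == "__main__":
    # Hypothetical month: 10,000 requests, each 2,000 input + 500 output characters.
    text_bill = character_cost(2_000 * 10_000, 500 * 10_000)
    print(f"Text generation: ${text_bill:.2f}")                                   # $2.50
    print(f"8 image node hours: ${node_hour_cost(8, IMAGE_NODE_HOUR_RATE):.2f}")  # $11.00
```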

Vertex AI notebooks are billed for the compute and storage resources they use, at rates similar to Compute Engine and Cloud Storage pricing.

Additional management fees depend on the region, the instances used, and whether the notebooks are managed.

Training and prediction costs cover training ML models and deploying them for batch or online predictions. They are calculated per hour based on the machine type used, with separate rates for GPU and TPU usage.

Executing ML workflows with Vertex AI Pipelines incurs an execution fee, starting at $0.03 per pipeline run, plus the cost of any resources used during the run.
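Because each pipeline run combines a fixed execution fee with variable resource costs, a rough estimate is a simple sum. The $0.03 fee comes from the figure above; the per-run resource cost is a hypothetical input you would estimate from machine types and run times.

```python
PIPELINE_RUN_FEE = 0.03  # USD per run, starting rate quoted above


def pipeline_cost(runs: int, resource_cost_per_run: float,
                  fee_per_run: float = PIPELINE_RUN_FEE) -> float:
    """Total cost = runs x (execution fee + resources consumed per run)."""
    return runs * (fee_per_run + resource_cost_per_run)


if __name__ == "__main__":
    # Hypothetical: one run per day for 30 days, about $0.50 of compute per run.
    print(f"${pipeline_cost(30, 0.50):.2f}")  # $15.90
```

In this example the execution fee is about 6% of the total; the resources each run consumes dominate the estimate.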

Vector Search costs cover building indexes and serving queries with Vertex AI’s vector search capabilities, and depend on the data size, the number of queries per second, and the number of nodes used.

Additional fees may apply based on the management of deployed models and operations. Management fees can vary by region and the specific services used.

Organizations can contact sales for custom pricing based on their specific requirements, including large-scale deployments and long-term commitments.

Discounts may be available for pre-paid commitments, reducing overall costs for predictable usage. Google Cloud’s online pricing calculator can help users estimate costs based on their projected usage.

Support with Vertex AI

New users must create a Google Cloud account. Signing up typically involves providing some basic information and setting up billing details.

Once your Google Cloud account is active, access Vertex AI by navigating to the Vertex AI section through the Google Cloud Console.

Familiarize yourself with the dashboard and tools such as Workbench notebooks, Vertex AI Pipelines, and Model Registry.

Vertex AI provides comprehensive documentation, quickstart guides, and tutorials that cover everything from basic setup to advanced model training and deployment.

These materials are crucial for effectively using and integrating various Vertex AI tools and features.

Google Cloud’s community forums are a valuable resource for getting help from other users and learning from shared experiences. Participate in discussions, ask questions, and get insights from experienced developers and Google Cloud experts.

Google Cloud offers various levels of support packages, including 24/7 access to customer service and technical support for critical issues.

Depending on the support plan, you can get help with configuration, troubleshooting, and best practices for using Vertex AI.

Google Cloud provides training sessions and certification programs to help users enhance their skills in using Google Cloud products effectively. These programs are tailored to different roles, such as data scientists, ML engineers, and IT professionals.

Access a variety of video tutorials and walkthroughs on YouTube and the Google Cloud website, which can help users understand specific features and workflows. Regularly scheduled webinars and live streams provide up-to-date information and live demonstrations.

Google Cloud’s developer advocates regularly take part in industry conferences, meetups, and hackathons, sharing insights and offering support.

These events are great for networking, learning best practices, and staying informed about the latest AI and machine learning advancements.

Applications of Vertex AI

Use machine learning models to predict future trends based on historical data. This includes forecasting market trends, customer behaviour, resource demands, and potential system failures.

This helps companies make data-driven decisions to optimize operations, reduce costs, and increase profitability.

Implement AI-powered chatbots and virtual assistants with Vertex AI to handle customer inquiries, bookings, and transactions.

This enhances the customer experience by providing quick, consistent, high-quality service while reducing the workload on human agents.

Use Vertex AI’s vision capabilities, such as AutoML image and video models and multimodal Gemini models, for object detection, image classification, and video content analysis. These support use cases such as assisted medical diagnostics, security surveillance, and content curation.

Use Vertex AI for sentiment analysis, language translation, document summarization, and content generation.

This improves communication with global audiences, automates routine documentation tasks, and enables personalized content at scale.

Deploy machine learning models to analyze user behaviour and preferences to recommend personalized products, services, or content.

This increases user engagement and satisfaction by delivering tailored experiences and recommendations.

Leverage AI to identify unusual patterns or anomalies that indicate fraudulent activity or credit risk.

This helps minimize financial losses by detecting and responding to potential fraud quickly, supporting regulatory compliance, and strengthening security.

Use AI models to forecast demand, optimize inventory levels, and manage logistics and distribution. This increases efficiency and reduces costs by improving resource allocation, minimizing waste, and shortening delivery times.

Apply AI to drug discovery, patient diagnosis, and treatment personalization based on genetic, lifestyle, and clinical data. More accurate diagnostics, personalized treatment plans, and faster medical research can improve patient outcomes.

Final Thoughts

Vertex AI’s tools and services enable organizations of all sizes and expertise levels to integrate AI into their operations, fostering innovation and improving efficiency across many applications.

Vertex AI equips businesses with the necessary tools to tackle complex challenges and capitalize on new opportunities, from predictive analytics and automated customer service to sophisticated image and video analysis.

Its robust machine learning operations and model management capabilities help ensure that AI projects are deployable at scale and remain maintainable and efficient over time.

The platform’s flexible, transparent pricing and rich ecosystem of tools and integrations may make it an accessible, scalable choice for companies looking to leverage AI to drive business growth and operational excellence.