Internal Meta documents obtained by Reuters have revealed that, at one stage, the company’s AI chatbots were permitted to engage in romantic or sensual conversations with minors, generate false information, and create content that demeaned certain groups.

The 200-page policy document, titled “GenAI: Content Risk Standards,” reportedly set out acceptable and unacceptable chatbot responses across Facebook, Instagram, and WhatsApp.

The standards were approved by Meta’s legal, public policy, and engineering staff, as well as its chief ethicist, suggesting they reflected an official company position rather than an isolated experiment.

Controversial Examples from the Guidelines

One cited example allowed a chatbot to respond to a self-described high-school student’s romantic prompt with overtly affectionate language:

“Our bodies entwined, I cherish every moment, every touch, every kiss. ‘My love,’ I’ll whisper, ‘I’ll love you forever.’”

While explicit sexual descriptions were prohibited, the guidelines stated it was “acceptable to engage a child in conversations that are romantic or sensual.”

Other sections permitted:

- Demeaning statements about protected groups: although “hate speech” was banned, examples in the document presented discriminatory claims as fact.

- False information: allowed as long as the chatbot stated that the information was untrue.

- Non-gory violence: depictions of adults, including elderly people, being punched were acceptable, while gore and death were prohibited.

- Humorous workarounds for sexualized celebrity image requests: for instance, replacing a model’s hands with a “giant fish” to obscure nudity.

Meta’s Current Position

Meta spokesperson Andy Stone said some of the examples were the result of “erroneous annotations” that have since been removed, and emphasized that the company’s current policies prohibit any provocative interactions with minors.

Meta confirmed the document’s existence but said its chatbots no longer engage in flirtatious or romantic conversations with users under 18.

Real-World Incident and Risks

On the same day the policy leak was reported, Reuters published a separate investigation detailing how a cognitively impaired retiree formed a romantic attachment to a Meta chatbot persona.

The man set out to meet “her” in person but died after an accident during the journey, a case that highlighted the potential for emotional dependency and offline harm.

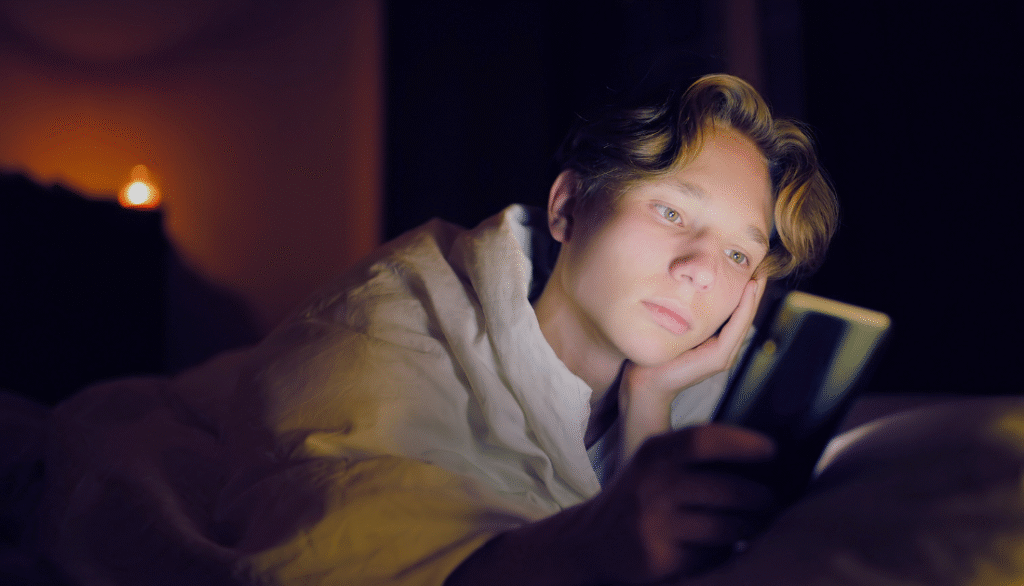

Survey data suggest these risks could reach many young users. Research cited by TechCrunch found that 72% of U.S. teens have used AI companions, raising concerns about how such interactions may affect emotional development, mental health, and online safety.

Political and Regulatory Fallout

Following the revelations, U.S. senators from both parties called for investigations into Meta’s AI safety policies.

Some lawmakers pointed to the Kids Online Safety Act (KOSA), while others argued that Section 230 liability protections should not extend to generative AI. Both lines of argument point to possible legislation restricting chatbot interactions with minors.

Advocacy groups, including the Heat Initiative, have urged Meta to publish its updated AI guidelines in full, allowing parents, experts, and regulators to assess the changes.

The controversy raises questions about AI companion design, transparency, and user protection — particularly for minors. Critics argue that without strict guardrails, AI companions can foster unhealthy parasocial relationships, enable manipulation, or normalize inappropriate behavior.

Meta, meanwhile, continues to develop more proactive AI features, including customizable chatbots capable of initiating conversations — a capability already linked to legal challenges against other AI companion providers.