Artificial intelligence (AI) accelerators, also called AI chips, deep learning processors, or neural processing units (NPUs), are specialized hardware designed to speed up neural networks, deep learning, and other machine learning applications.

As the scope of AI technology broadens, the role of AI accelerators becomes increasingly critical, enabling the efficient processing of vast amounts of data required by AI applications.

AI accelerators find applications across a wide range of devices and sectors, including smartphones, personal computers, robotics, autonomous vehicles, the Internet of Things (IoT), and edge computing.

They continue a long tradition of offloading specialized work from the CPU to coprocessors, such as graphics processing units (video cards) and sound cards.

Core Functions

Parallel processing is the cornerstone of AI accelerators’ performance. Traditional CPUs devote a handful of powerful cores to executing instruction streams largely in sequence, whereas AI accelerators pack in large numbers of simpler arithmetic units designed to perform many computations simultaneously.

This matters because machine learning and deep learning models are dominated by operations such as matrix multiplication, which break down into many independent multiply-accumulate steps. Executing those steps in parallel lets accelerators handle the vast amounts of data and calculation these models require far faster and more efficiently.
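To make the idea concrete, here is a minimal Python sketch of why neural network math parallelizes well: a matrix-vector product splits into row-wise dot products that do not depend on one another. It is an illustration, not how any accelerator is actually programmed. The thread pool stands in for an accelerator's parallel processing elements; because of CPython's global interpreter lock it will not actually run faster, so treat it as a picture of the decomposition only. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """One multiply-accumulate chain: the unit of work a single processing element would own."""
    acc = 0.0
    for a, b in zip(row, vec):
        acc += a * b
    return acc


def matvec_sequential(matrix, vec):
    # One worker computes the rows one after another.
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    # No row depends on any other row, so the rows can be handed to
    # separate workers at the same time; map() keeps results in row order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))


weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
x = [10.0, 1.0]
print(matvec_sequential(weights, x))  # [12.0, 34.0, 56.0]
print(matvec_parallel(weights, x))    # [12.0, 34.0, 56.0]
```

Both versions produce identical results; the only difference is how the independent pieces of work are scheduled.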

AI accelerators often use reduced-precision arithmetic to save power and increase processing speed. Instead of the 32-bit floating-point numbers that general-purpose chips typically use, calculations are performed in lower-precision formats such as 16-bit floating point or 8-bit integer or floating-point values.

Smaller numbers need less memory, less bandwidth, and simpler arithmetic circuits, so an accelerator can perform more operations per second and per watt. Neural networks often tolerate this loss of precision with little drop in accuracy.

The technique applies to both phases of a model’s life. Training commonly uses mixed precision, doing most arithmetic in 16 bits while keeping a 32-bit master copy of the weights, and inference is often quantized further, to 8 bits.
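A minimal Python sketch of two common reduced-precision formats, using only the standard library: rounding values to 16-bit floating point, and symmetric 8-bit integer quantization with a single scale factor. The 8-bit example uses integers (INT8), a widely used inference format; 8-bit floating-point formats also exist but are not shown. The helper names are hypothetical.

```python
import struct


def to_float16(value):
    """Round a Python float (64-bit) to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: map [-max|v|, max|v|] onto [-127, 127]."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale for all-zero input
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    # Recover approximate real values from the 8-bit codes.
    return [qi * scale for qi in q]


weights = [0.1234, -0.5, 0.75, 0.0031]
print([to_float16(w) for w in weights])
q, scale = quantize_int8(weights)
print(q)  # [21, -85, 127, 1]
print(dequantize_int8(q, scale))
```

Each quantized value fits in one byte instead of four, and because nothing is clipped here, the round-trip error of each value is at most half the scale factor.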

The memory architecture of AI accelerators is another critical factor in their performance. Unlike traditional processors, AI accelerators use a specialized memory hierarchy designed to optimize data movement and storage.

This typically includes large on-chip SRAM buffers or caches placed next to the compute units, backed by high-bandwidth memory (HBM), which together reduce latency and increase throughput.

Moving data is often more costly in time and energy than computing on it, so keeping frequently reused values close to the arithmetic units is essential when handling the large datasets involved in AI workloads.

The faster data can move between levels of the memory hierarchy, the more time the compute units spend doing useful work instead of waiting, which lets AI accelerators sustain high processing speeds on complex algorithms.
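Tiling (also called blocking) is one standard way software and hardware exploit such a memory hierarchy. The plain-Python sketch below is a hedged illustration: the tile size and function names are assumptions, and real accelerators do this with dedicated on-chip buffers rather than Python lists. It computes the same result as a naive matrix multiply but works through small blocks that would fit in fast local memory.

```python
def matmul_naive(a, b):
    n, k, m = len(a), len(b), len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)] for i in range(n)]


def matmul_tiled(a, b, tile=2):
    """Blocked matrix multiply.

    Each tile x tile block of A and B stands in for data copied once into
    fast on-chip memory and then reused for many multiply-adds, instead of
    being re-fetched from off-chip memory for every single product.
    """
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for p0 in range(0, k, tile):
                # Work only on the currently "loaded" tiles of A and B.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
B = [[1, 0], [0, 1], [1, 1]]
print(matmul_tiled(A, B))  # [[4.0, 5.0], [10.0, 11.0], [16.0, 17.0]]
```

The arithmetic is identical to the naive version; only the order in which data is visited changes, and that order determines how often values must travel from slow memory.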

AI accelerators often feature a heterogeneous architecture, which means they integrate multiple types of processors to handle different tasks.

This design enables AI accelerators to optimize performance by using the best-suited processor for each specific function. For instance, a system-on-chip might route neural network inference to a neural processing unit, graphics to a GPU, signal processing to a digital signal processor (DSP), and general control logic to its CPU cores.

Distributing tasks among specialized processors in this way raises overall performance, efficiency, and flexibility, allowing a single chip to support a wide range of AI applications.
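The routing idea can be sketched as a simple dispatch table. Everything here is hypothetical: the task kinds, the unit names, and the `assign` helper are illustrative only, since real systems-on-chip rely on vendor drivers and runtimes to make these decisions.

```python
from dataclasses import dataclass

# Hypothetical mapping from kind of work to the unit best suited for it.
ROUTING = {
    "matrix_math": "NPU",
    "signal_filtering": "DSP",
    "rendering": "GPU",
}


@dataclass
class Task:
    name: str
    kind: str


def assign(tasks, routing=ROUTING, fallback="CPU"):
    """Group tasks by the unit suited to their kind; unrecognized kinds fall back to the CPU."""
    plan = {}
    for task in tasks:
        plan.setdefault(routing.get(task.kind, fallback), []).append(task.name)
    return plan


jobs = [
    Task("face_detect", "matrix_math"),
    Task("noise_reduction", "signal_filtering"),
    Task("parse_config", "control_logic"),
]
print(assign(jobs))
# {'NPU': ['face_detect'], 'DSP': ['noise_reduction'], 'CPU': ['parse_config']}
```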

Many AI accelerators are designed for specific AI tasks, which allows them to excel in particular applications. Application-specific integrated circuits (ASICs) are the extreme case: they are built for predefined workloads.

This task-specific design lets AI accelerators achieve high performance and efficiency in their intended use cases, giving them significant advantages over general-purpose processors at the cost of generality.

Types of AI Accelerators

Neural Processing Units

NPUs are specialized processors designed explicitly for deep learning and neural network computations. They are optimized to handle the complex mathematical operations involved in training and inference tasks in neural networks.

NPUs can process large amounts of data in parallel, making them highly efficient for functions such as image recognition, speech processing, and natural language understanding.

Their architecture is tailored to the specific requirements of AI algorithms, which gives them better performance and energy efficiency than general-purpose processors on these workloads.
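Most of the mathematical work in a neural network reduces to multiply-accumulate (MAC) steps followed by a simple nonlinearity. A minimal sketch of one fully connected layer with a ReLU activation, counting its MACs, shows the pattern NPU hardware is built to repeat at scale; the function name and example values are made up for illustration.

```python
def dense_relu(x, weights, bias):
    """One fully connected layer followed by ReLU.

    Returns the activations and the number of multiply-accumulate (MAC)
    operations performed, which equals inputs x outputs for this layer.
    """
    outputs, macs = [], 0
    for row, b in zip(weights, bias):
        acc = b
        for w, xi in zip(row, x):
            acc += w * xi  # one MAC
            macs += 1
        outputs.append(max(0.0, acc))  # ReLU activation
    return outputs, macs


x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 1.0], [-1.0, -1.0, 0.0]]
b = [0.0, 1.0]
print(dense_relu(x, W, b))  # ([1.5, 0.0], 6)
```

A real layer may have thousands of inputs and outputs, so the MAC count grows into the millions per layer, which is why NPUs dedicate most of their silicon to MAC units.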

Graphics Processing Units

Originally developed to enhance graphics rendering and image processing, GPUs have become essential in the realm of AI due to their parallel processing capabilities.

GPUs excel at handling the large-scale matrix operations and data parallelism required by AI models, particularly in training deep learning networks.

By connecting multiple GPUs to a single AI system, organizations can significantly increase computational power, enabling faster training times and more efficient processing of complex AI tasks. GPUs are widely used in data centers and AI research for their versatility and high performance.
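One common way multiple GPUs share training work is data parallelism: each device computes gradients on its own slice of the batch, and the results are averaged (an all-reduce) before the weights are updated. The Python sketch below simulates this for a one-parameter linear model; the "devices" are just list slices, and all names are hypothetical.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the model y ≈ w * x over one shard."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def data_parallel_grad(w, xs, ys, num_devices):
    """Split the batch across simulated devices, then average their gradients.

    The averaging step is what an all-reduce performs across real GPUs.
    Requires equal-sized shards so the plain average is correct.
    """
    if len(xs) % num_devices:
        raise ValueError("batch size must be divisible by num_devices")
    shard = len(xs) // num_devices
    grads = [
        grad_mse(w, xs[d * shard:(d + 1) * shard], ys[d * shard:(d + 1) * shard])
        for d in range(num_devices)
    ]
    return sum(grads) / num_devices


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
print(grad_mse(1.0, xs, ys))               # -15.0
print(data_parallel_grad(1.0, xs, ys, 2))  # -15.0
```

With equal-sized shards, the averaged gradient matches the full-batch gradient (up to floating-point rounding), so adding devices shortens each training step without changing the math.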

Field Programmable Gate Arrays

FPGAs are reconfigurable chips whose logic can be reprogrammed after manufacturing to implement specific functions, including AI accelerator designs. Unlike fixed-function accelerators, FPGAs offer a high degree of flexibility, allowing developers to tailor their architecture to meet the needs of particular AI workloads.

This reprogrammability makes FPGAs suitable for a variety of applications, including real-time data processing, IoT, and aerospace.

FPGAs are especially valuable in scenarios where adaptability and quick iteration are crucial, as their hardware can be reconfigured to optimize performance for different tasks.
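At the lowest level, much of an FPGA's reprogrammability comes from lookup tables (LUTs): small memories that store a truth table and can therefore implement any Boolean function of their inputs. The Python class below models a single 4-input LUT as a simplification; real devices combine many LUTs (commonly with 4 to 6 inputs) with flip-flops, routing, and hard arithmetic blocks, and are configured by loading a bitstream produced by vendor tools. The class name is hypothetical.

```python
class LUT4:
    """A 4-input lookup table, a basic logic element in FPGA fabrics.

    Programming the cell means loading a 16-entry truth table; the same
    hardware then behaves as whatever Boolean function was loaded.
    """

    def __init__(self):
        self.table = [0] * 16

    def configure(self, func):
        # Precompute the output for every combination of the four input bits.
        self.table = [
            func((i >> 3) & 1, (i >> 2) & 1, (i >> 1) & 1, i & 1) for i in range(16)
        ]

    def __call__(self, a, b, c, d):
        # The inputs simply form an address into the stored truth table.
        return self.table[(a << 3) | (b << 2) | (c << 1) | d]


cell = LUT4()
cell.configure(lambda a, b, c, d: a & b & c & d)  # configured as a 4-input AND
print(cell(1, 1, 1, 1), cell(1, 0, 1, 1))         # 1 0
cell.configure(lambda a, b, c, d: a ^ b ^ c ^ d)  # reconfigured as 4-input XOR
print(cell(1, 1, 1, 1), cell(1, 0, 1, 1))         # 0 1
```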

Application-Specific Integrated Circuits

ASICs are designed for specific AI tasks, offering optimized performance for predefined workloads. Since ASICs are purpose-built, they typically outperform more general-purpose accelerators in their targeted applications.

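Google’s Tensor Processing Unit (TPU) is an ASIC developed to accelerate machine learning workloads. It was originally designed around the TensorFlow software library and can now also be programmed through frameworks such as JAX and PyTorch.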

ASICs are not reprogrammable, but their specialized nature allows for high efficiency and performance in dedicated AI functions, such as deep learning model training and inference.
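Google's TPUs are built around a systolic array: a grid of multiply-accumulate cells that pass operands to their neighbours so each value fetched from memory is reused many times. The cycle-level Python model below is a sketch of the general technique, not of any specific TPU generation; it uses an output-stationary dataflow, one of several common arrangements, and the function name is hypothetical.

```python
def systolic_matmul(A, B):
    """Cycle-by-cycle model of an output-stationary systolic array computing A @ B.

    PE (i, j) keeps a running sum for C[i][j]. Each cycle it multiplies the
    A value arriving from its left neighbour by the B value arriving from
    above, adds the product to its sum, and passes both values on.
    Row i of A enters i cycles late and column j of B enters j cycles late,
    so matching operands A[i][p] and B[p][j] meet at PE (i, j) on cycle i + j + p.
    """
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0] * m for _ in range(n)]
    a_reg = [[0] * m for _ in range(n)]  # value each PE passes to the right
    b_reg = [[0] * m for _ in range(n)]  # value each PE passes downward
    for t in range(n + m + k - 2):       # last useful cycle is (n-1)+(m-1)+(k-1)
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j > 0:
                    new_a[i][j] = a_reg[i][j - 1]
                elif 0 <= t - i < k:
                    new_a[i][j] = A[i][t - i]  # skewed feed at the left edge
                if i > 0:
                    new_b[i][j] = b_reg[i - 1][j]
                elif 0 <= t - j < k:
                    new_b[i][j] = B[t - j][j]  # skewed feed at the top edge
                acc[i][j] += new_a[i][j] * new_b[i][j]
        a_reg, b_reg = new_a, new_b
    return acc


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Every operand is read from memory once at the array's edge and then reused as it travels across the grid, which is the property that makes systolic arrays efficient for dense matrix math.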

Wafer-Scale Integration

Wafer-scale integration (WSI) builds a single, extremely large chip from an entire silicon wafer, rather than cutting the wafer into many separate chips and networking them together. Keeping all of the cores and memory on one piece of silicon avoids much of the slower, more power-hungry communication between separate chips.

One notable example is the Wafer-Scale Engine (WSE) developed by Cerebras, which integrates an extensive network of processing cores and on-chip memory into a single chip.

The WSE is designed to accelerate deep learning, providing very high computational power for training large neural networks.

Comparison and Use Cases

NPUs vs. GPUs

While both NPUs and GPUs are designed to handle parallel processing, NPUs are specifically optimized for neural network tasks. GPUs, being more versatile, are used not only in AI but also in graphics rendering and other high-performance computing tasks.

NPUs are typically preferred where neural network workloads dominate and power is constrained, such as the on-device AI engines in smartphones and laptops. GPUs, by contrast, are common in general-purpose systems that handle a mix of workloads.

FPGAs vs. ASICs

FPGAs offer flexibility and programmability, making them ideal for applications where requirements may change or for rapid prototyping.

ASICs, with their fixed design, provide superior performance on specific tasks but lack the adaptability of FPGAs. FPGAs are therefore often used in environments requiring real-time processing and adaptability.

ASICs, on the other hand, suit large-scale, stable AI operations where maximum efficiency justifies their high upfront design cost.

WSI Applications

WSI chips like the Cerebras WSE are particularly suited for environments where extreme computational power is required, such as large-scale AI model training in data centers.

Their ability to integrate massive numbers of processing cores into a single chip gives them very high performance on the most demanding AI workloads.

Benefits of AI Accelerators

Speed

Low Latency – AI accelerators reduce the delays in processing, which is crucial for applications requiring real-time data analysis and decision-making.

High Throughput – By performing enormous numbers of calculations in parallel, AI accelerators can process large datasets much faster than traditional CPUs.
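Latency and throughput can pull in different directions. The hedged sketch below uses a toy cost model (a fixed overhead per batch plus a per-item cost) with made-up numbers, alongside the usual back-of-envelope formula for peak throughput, in which each multiply-accumulate counts as two operations. None of the figures describe a real product, and the function names are hypothetical.

```python
def peak_ops_per_second(mac_units, clock_hz):
    # Each multiply-accumulate is conventionally counted as 2 operations.
    return 2 * mac_units * clock_hz


def batched_inference(batch_size, fixed_overhead_s, per_item_s):
    """Toy model: each batch pays a fixed overhead plus a per-item cost.

    Returns (latency of the whole batch in seconds, items processed per second).
    """
    latency = fixed_overhead_s + batch_size * per_item_s
    return latency, batch_size / latency


print(peak_ops_per_second(mac_units=4096, clock_hz=1e9))  # 8192000000000.0
for bs in (1, 32):
    print(bs, batched_inference(bs, fixed_overhead_s=0.002, per_item_s=0.0001))
```

With these assumed numbers, a batch of one finishes in about 2.1 ms (roughly 476 items per second), while a batch of 32 takes about 5.2 ms but processes roughly 6,150 items per second. Batching raises throughput at the cost of latency, which is why real-time applications often run small batches.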

Efficiency

Power Efficiency – AI accelerators complete far more operations per watt than general-purpose processors. This efficiency comes from specialized architectures that spend less energy on instruction handling and data movement for each calculation.

Thermal Management – Because they waste less energy per operation, AI accelerators generate less heat for a given workload, which can reduce cooling requirements. High-end data center accelerators still draw substantial power, however, and often need liquid or other advanced cooling.
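Efficiency is best compared as energy per unit of work rather than raw power draw. In the sketch below, with deliberately hypothetical figures that do not describe any real product, the accelerator draws twice the power of the general-purpose processor but finishes the same job 100 times sooner, so it uses 50 times less energy.

```python
def ops_per_watt(ops_per_second, watts):
    return ops_per_second / watts


def energy_joules(total_ops, ops_per_second, watts):
    """Energy for a fixed job = power draw x time taken."""
    return watts * (total_ops / ops_per_second)


# Hypothetical figures for illustration only, not measurements.
job_ops = 1e15
cpu = {"ops_per_second": 1e12, "watts": 200}
accel = {"ops_per_second": 1e14, "watts": 400}

print(energy_joules(job_ops, **cpu))    # 200000.0
print(energy_joules(job_ops, **accel))  # 4000.0
print(ops_per_watt(**cpu), ops_per_watt(**accel))
```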

Design

Heterogeneous Architecture – AI accelerators often feature a heterogeneous architecture, integrating multiple types of processors optimized for different tasks.

Task-Specific Optimization – Many AI accelerators are designed for specific AI tasks, such as deep learning or neural network computations.

Scalability

Data Center Integration – AI accelerators are designed to be easily integrated into data centers, where they can scale up to handle massive AI workloads.

Edge Computing – AI accelerators also enable the deployment of AI applications at the edge of the network, closer to the data sources.

This reduces latency and bandwidth usage by processing data locally rather than sending it to centralized data centers.

Edge AI accelerators are optimized for energy efficiency and real-time processing, making them ideal for IoT devices and other edge computing applications.
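The bandwidth saving is simple arithmetic. The sketch below compares streaming camera frames to a data center with sending only small detection results computed on a local accelerator; the frame rate, frame size, record size, and function name are assumptions chosen for illustration.

```python
def uplink_bits_per_second(events_per_second, bytes_per_event):
    return events_per_second * bytes_per_event * 8


# Hypothetical camera: 30 frames/s at about 100 KB per compressed frame.
cloud = uplink_bits_per_second(30, 100_000)
# With an on-device accelerator, only a small detection record
# (assumed 200 bytes) is sent for each frame.
edge = uplink_bits_per_second(30, 200)
print(cloud, edge, cloud / edge)  # 24000000 48000 500.0
```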

Enabling Innovation

The advanced capabilities of AI accelerators drive innovation across various industries by enabling new applications and improving existing ones.

In medical imaging and diagnostics, AI accelerators speed up the analysis of large volumes of data, leading to faster and more accurate diagnoses.

In autonomous vehicles and robotics, AI accelerators process sensor data in real time, allowing these systems to navigate and interact with their environment safely and efficiently.

AI accelerators power large language models, enabling sophisticated applications such as chatbots, language translation, and sentiment analysis.

Cost Efficiency

While AI accelerators represent a significant investment, their efficiency and performance can lead to substantial cost savings in the long run.

Reduced power consumption and lower cooling requirements translate to lower operational costs for data centers and other facilities that deploy AI accelerators.

Faster processing speeds and higher efficiency mean that AI models can be trained and deployed more quickly, reducing development time and associated costs.
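A rough cost-per-job calculation shows how a more expensive accelerator can still come out ahead over its service life. All prices, power figures, job rates, the three-year period, and the 50% cooling overhead below are hypothetical assumptions, not vendor data, and the function name is illustrative.

```python
HOURS_PER_YEAR = 24 * 365


def cost_per_job(hardware_usd, watts, jobs_per_hour, years=3,
                 usd_per_kwh=0.10, cooling_overhead=0.5):
    """Hardware plus electricity over the deployment period, divided by jobs completed.

    cooling_overhead=0.5 models cooling as an extra 50% on top of the device's
    own power draw; every default here is an assumption, not a measured figure.
    """
    hours = years * HOURS_PER_YEAR
    energy_kwh = watts * (1 + cooling_overhead) * hours / 1000
    total_usd = hardware_usd + energy_kwh * usd_per_kwh
    return total_usd / (jobs_per_hour * hours)


general = cost_per_job(hardware_usd=20_000, watts=1_000, jobs_per_hour=1)
accelerated = cost_per_job(hardware_usd=60_000, watts=2_000, jobs_per_hour=20)
print(round(general, 3), round(accelerated, 3))  # 0.911 0.129
```

With these assumed inputs, the accelerated system costs three times as much up front but roughly a seventh as much per job.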

Major Players

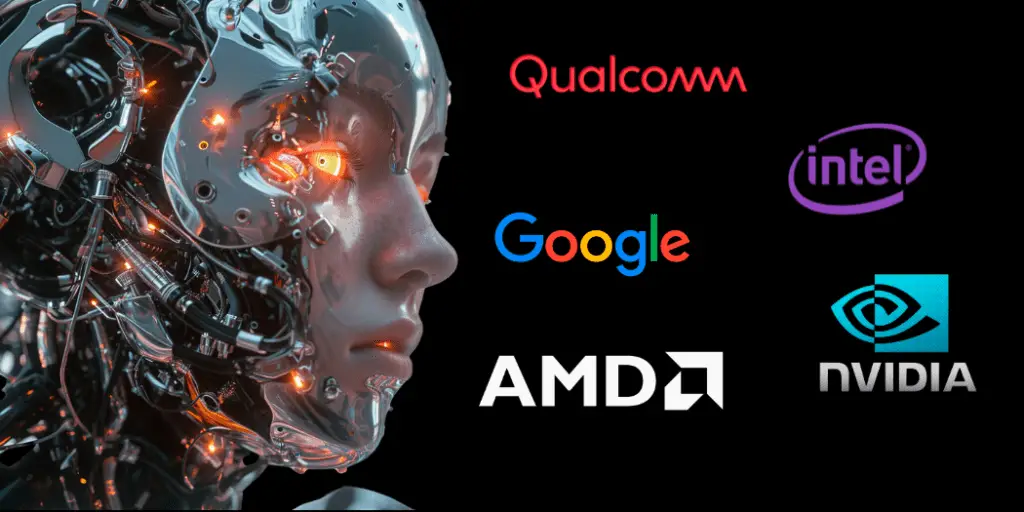

NVIDIA

- GPUs: NVIDIA is a leader in the AI accelerator market, and its data center GPUs are designed for AI and machine learning tasks. The company’s CUDA platform has become a de facto standard for programming parallel AI applications.

- AI Platforms: NVIDIA offers comprehensive AI platforms, including hardware and software solutions, that cater to data centers, autonomous vehicles, and robotics.

Google

- TPUs: Google’s Tensor Processing Units are custom-designed AI accelerators optimized for machine learning workloads, originally built around the TensorFlow framework. TPUs are used in Google’s data centers and are available to customers through Google Cloud.

- AI Research: Google continuously invests in AI research and development, integrating TPUs into various AI projects and services.

Intel

- FPGAs and AI Chips: Intel provides a range of AI accelerators, including FPGAs and custom AI chips such as the Intel Nervana Neural Network Processor, which Intel later discontinued in favor of Habana Labs’ Gaudi processors. These accelerators are designed to enhance data center performance and support edge computing applications.

- Intel has acquired several companies, such as Altera and Habana Labs, to strengthen its position in the AI accelerator market.

Apple

- Neural Engine: Apple’s Neural Engine, integrated into its A-series and M-series chips, is designed to accelerate machine learning tasks on its devices.

- This technology powers features like facial recognition, image processing, and natural language processing in iPhones, iPads, and Macs.

- Custom Silicon: Apple continues to develop custom silicon solutions to enhance AI capabilities across its product lineup.

AMD

- Instinct GPUs: AMD offers a range of GPUs for AI and machine learning tasks. Its Instinct accelerator line, originally branded Radeon Instinct, is designed specifically for data center AI workloads.

- EPYC Processors: AMD’s EPYC server CPUs are paired with its GPUs to provide AI acceleration for data centers and cloud computing.

Cerebras Systems

- Wafer-Scale Engine: Cerebras Systems is known for its Wafer-Scale Engine (WSE), which the company describes as the largest chip ever built. The WSE is designed to handle massive AI workloads, providing very high computational power for deep learning tasks.

- AI Solutions: Cerebras offers integrated AI solutions that combine hardware and software to optimize AI performance for research and commercial applications.

Qualcomm

- AI Chipsets: Qualcomm develops AI chipsets for mobile devices, such as the Snapdragon platform, which includes dedicated AI accelerators to enhance mobile AI applications.

- Edge AI: Qualcomm’s AI accelerators are also used in edge computing scenarios, providing efficient AI processing for IoT devices and smart infrastructure.

AI accelerators are transforming the landscape of artificial intelligence by providing the necessary computational power to handle complex AI workloads efficiently.

These specialized processors offer significant advantages in speed, efficiency, and scalability, making them indispensable across various industries such as autonomous vehicles, healthcare, finance, and telecommunications.

Major technology companies like NVIDIA, Google, Intel, Apple, AMD, Cerebras Systems, and Qualcomm are leading the development and adoption of AI accelerators, driving innovation and enhancing the capabilities of AI applications.