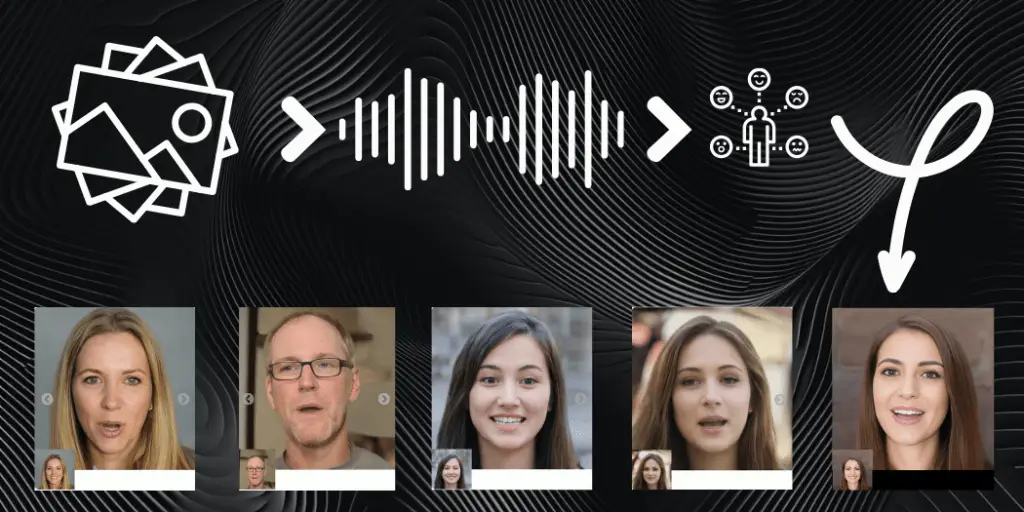

Microsoft Research Asia recently introduced an innovative AI technology known as VASA-1, marking a significant advancement in synthetic media.

VASA-1, which stands for Visual Affective Skills Animator, can generate animated videos from a single photograph and an audio track.

This technology simulates realistic facial expressions and lip movements in sync with the audio, and it does so with impressive realism and efficiency.

As we examine VASA-1’s capabilities and applications, we also consider its ethical implications and potential uses.

Microsoft’s VASA-1 Digital Media

VASA-1, developed by Microsoft Research Asia, represents a breakthrough in audio-driven animation technologies.

This AI model, short for Visual Affective Skills Animator, is designed to animate static images into lifelike videos by syncing them to an audio track.

VASA-1 utilizes advanced machine-learning techniques to analyze a static photo and a speech audio clip. It then generates a video where the subject’s facial expressions, head movements, and lip movements are perfectly synced to the audio.

This process involves complex algorithms that can detect and mimic human-like expressions and subtle nuances in behavior, significantly enhancing the animated figures’ realism.

Unlike some other models, which may also include voice cloning, VASA-1 focuses solely on the visual aspects and relies on pre-recorded audio tracks.

This distinct approach ensures precise synchronization between audio and visual elements, making the animated character appear to speak or sing naturally according to the audio instructions.

The model can manipulate various facial features independently, allowing it to express a wide range of emotions and reactions that correspond to the tone and context of the audio.

The model is optimized for high performance. It can generate videos at a resolution of 512×512 pixels and up to 40 frames per second with minimal latency.

These specifications make VASA-1 suitable for real-time applications like video conferencing or live-streaming avatars. Its efficiency significantly improved over earlier speech animation methods, often needing help with realism and expressiveness.

One of VASA-1’s key innovations is its use of the VoxCeleb2 dataset, which includes over a million utterances from thousands of celebrities extracted from YouTube videos.

This extensive training has enabled the AI to understand a wide array of human expressions and accents, further improving its ability to realistically animate photos of diverse individuals.

While VASA-1 marks a considerable advancement in AI-driven animation, the developers acknowledge that it still contains some identifiable artifacts that can distinguish the animations from actual human actions.

The ongoing development aims to minimize these discrepancies to achieve an authenticity that matches real-life videos.

VASA-1’s technical framework sets a new standard in lifelike digital animations, offering high-quality output and operational efficiency. This makes it a promising tool for various applications, even as it evolves and improves.

Development and Training of VASA-1

The development and training of VASA-1, a state-of-the-art AI model from Microsoft Research Asia, involves sophisticated processes and a substantial dataset to achieve its impressive capabilities.

VASA-1 was conceived to enhance the realism and efficiency of audio-driven facial animation.

The team focused on developing a system that could provide lifelike animations from a single photograph paired with an audio track, overcoming the limitations of previous technologies that often resulted in less expressive and less realistic animations.

A critical component in VASA-1’s training is the VoxCeleb2 dataset. Compiled by researchers at the University of Oxford in 2018, this dataset is a large-scale collection of over 1 million spoken utterances from 6,112 celebrities extracted from YouTube videos.

The diversity of the dataset, featuring various accents, vocal nuances, and facial expressions, provides a rich source of data for training the AI to understand and replicate human-like expressions accurately.

VASA-1 employs advanced machine learning algorithms and intense learning techniques to analyze and learn from the VoxCeleb2 dataset.

These algorithms enable the model to detect subtle facial movements and changes in expression associated with speech.

By training on diverse faces and voices, VASA-1 can adapt to new images and audio tracks it has never encountered before, making it highly versatile.

The model is specifically optimized for real-time performance and can generate high-resolution animations at up to 40 frames per second with minimal latency. This is particularly significant for applications requiring instant feedback, such as interactive virtual avatars or real-time video enhancements.

During its development, the team faced challenges in achieving seamless lip synchronization and naturalistic eye movements. These issues were addressed through iterative training and model refining, using quantitative metrics and qualitative assessments to improve performance.

Given the potential misuse of such technology, the training process also incorporated ethical considerations.

The developers ensured the model was trained on publicly available data and focused on general capabilities rather than person-specific mimicry. This was part of a broader effort to balance innovation with responsibility in deploying AI technologies.

The ongoing development of VASA-1 includes efforts to further reduce the presence of artifacts in the generated videos and to bridge the gap toward achieving perfect lifelike authenticity.

The team continues to refine the model, incorporating feedback from real-world applications and new research findings to enhance its functionality and application scope.

The development and training of VASA-1 highlight a significant leap forward in AI-driven media creation. This leap is backed by robust training methods and a commitment to ethical AI practices. This groundwork paves the way for future advancements and broader technology applications.

Potential Applications and Demonstrations of VASA-1

Microsoft’s VASA-1 technology, with its advanced capability to animate still photos using audio inputs, opens up many potential applications across various sectors.

Below, we explore some promising applications and demonstrations that showcase the versatility and potential of this technology.

Microsoft has posted videos on its research page where VASA-1 animates photos to sync with pre-recorded audio tracks.

These include people speaking or singing, demonstrating the AI’s ability to handle different vocal pitches and speech patterns.

The demonstrations highlight VASA-1’s ability to modify facial expressions and eye gaze, adjusting them to reflect the mood conveyed by the audio, such as happiness, sadness, or anger.

In one of the more creative applications, VASA-1 animated the Mona Lisa, allowing her to rap to a modern audio track.

This shows the technology’s cultural adaptability and potential to create engaging educational and entertainment content.

VASA-1 could revolutionize the creation of digital avatars and virtual assistants by providing realistic, human-like facial animations that react in real-time to voice commands or queries.

In the entertainment industry, VASA-1 could produce animated characters for films, video games, and virtual reality, reducing costs and production times while increasing the realism of digital characters.

VASA-1 can create interactive educational content where historical figures or book characters come to life, speaking and interacting with students engagingly.

VASA-1 could animate avatars in real time for teleconferencing, allowing participants to engage more naturally without the need for high-quality video feeds, thereby reducing bandwidth requirements.

This technology could also create more dynamic and inclusive communication aids for people with speech or hearing impairments, providing real-time animated sign language interpreters.

In therapy, VASA-1 could help create virtual companions or therapists that provide support with realistic emotional responses, potentially making virtual treatment more accessible and less daunting.

While exploring these applications, considering the ethical implications, particularly regarding privacy and consent, is essential.

The potential for misuse in creating non-consensual videos of individuals highlights the need for strict regulations and guidelines to govern the deployment of such technologies.

As VASA-1 continues to be refined, its applications are likely to expand further, integrating more seamlessly with various technologies and becoming a staple in industries ranging from education to entertainment.

Microsoft’s ongoing development and demonstration of VASA-1 highlight its current capabilities and hint at the future potential of AI-driven animation technologies.

Ethical Considerations and Privacy Concerns of VASA-1

The development and deployment of VASA-1, Microsoft’s AI technology capable of animating still photos with audio, bring several ethical considerations and privacy concerns to the forefront.

These issues are pivotal in guiding how such technology is utilized, ensuring it benefits society while mitigating potential harms.

One of the most significant concerns is the potential misuse of VASA-1 to create deepfake content. Deepfakes can be used to

Impersonate individuals in videos without consent, potentially damaging reputations or misleading audiences.

Fabricate misleading media, contributing to the spread of disinformation, which can have wide-reaching effects on public opinion and political landscapes.

Harass or blackmail individuals by placing them in compromising or false scenarios.

Using photos of individuals without consent to create videos infringes on personal privacy and autonomy. The accessibility of images online increases the risk that someone’s image could be misused without their knowledge or permission.

AI-generated examples: For privacy reasons, demonstrations on their research page use images generated by other AI models like StyleGAN2 or DALL-E 3, avoiding using real individuals’ likenesses without consent.

Microsoft emphasizes that the technology is intended for positive applications such as education, accessibility, and entertainment, not for impersonating real individuals.

The potential impacts of technologies like VASA-1 on society necessitate robust regulatory frameworks to prevent harmful uses while promoting beneficial applications.

Legal frameworks govern the use of synthetic media, defining clear lines around consent, usage rights, and privacy.

Transparency requirements for content created with AI, possibly requiring labels on AI-generated media to inform viewers of its synthetic nature.

Development of detection technologies to identify deepfake content, helping to mitigate the spread of false information.

Balancing innovation with responsibility: Ensuring that advancements in AI benefit society without eroding ethical standards or personal freedoms.

Equipping people with the knowledge to recognize AI-generated content and understand its implications.

While VASA-1 showcases remarkable capabilities in animating photos with realistic precision, its application must be approached carefully, considering ethical and privacy concerns.

Establishing stringent ethical guidelines and robust regulatory frameworks will be crucial in shaping the future of such transformative technologies, ensuring they are used responsibly and for the greater good.

Future Prospects and Limitations of VASA-1

Microsoft’s VASA-1 represents a significant advancement in AI-driven animation, offering intriguing possibilities and posing considerable challenges.

Future Prospects

VASA-1 could revolutionize character animation, reducing the costs and time required for producing animated content. It could also enable novel forms of interactive storytelling in which characters respond dynamically to audience input.

Integrating VASA-1 with VR and AR could enhance user experiences by creating more realistic avatars or virtual assistants interacting with users in real time.

AI avatars powered by VASA-1 could provide a more personalized interaction in customer service applications, making digital encounters feel more human-like.

As the technology matures, we might see more sophisticated real-time applications, such as live translations with avatars mimicking the speaker’s facial expressions in different languages, enhancing communication across language barriers.

VASA-1 could be used to create educational tools that cater to diverse learning needs, including generating sign language interpreters for the hearing impaired or reading assistants for the visually impaired.

Despite significant advancements, the videos generated by VASA-1 still contain some artifacts and need more complete authenticity compared to actual human actions. Further research and development are required to close this gap.

The quality and diversity of the training data directly affect the model’s output. Biases in the dataset could lead to a less accurate representation of minority groups or non-standard accents.

The ability to create realistic videos from static images raises concerns about creating deepfakes, which could be used for malicious purposes such as misinformation, impersonation, or fraud.

As laws and regulations evolve to keep up with technological advancements, VASA-1 must adhere to stricter data privacy, consent, and transparency guidelines.

While VASA-1 can streamline and enhance certain processes, it also risks displacing jobs in industries like animation and customer support, where human roles could be supplanted by automated systems.

Building public trust in AI technologies like VASA-1 is essential, especially as their applications become more integrated into daily life. Transparency about capabilities and limitations and clear communication about using AI-generated content are crucial.

The ongoing development of VASA-1 and similar technologies will likely focus on enhancing AI-generated animations’ realism and ethical deployment.

Researchers and developers will need to collaborate closely with ethicists, policymakers, and the public to navigate the complex opportunities and challenges presented by this technology.

As VASA-1 evolves, its potential to innovate across various sectors will continue to grow, underscoring the need for a balanced approach that maximizes benefits while mitigating risks.

Broader Context and Comparisons of VASA-1

Microsoft’s VASA-1 is a notable entry into the rapidly evolving field of AI-driven animation technologies.

It is beneficial to compare it with similar technologies and consider the broader context of AI development in media and communication to fully appreciate its position and potential impact.

This comparison highlights the unique aspects of VASA-1 and illuminates the shared challenges and opportunities that these technologies face.

Like VASA-1, EMO animates static images based on audio inputs to create lifelike videos.

EMO may focus more on emotional expressions and could be tailored for specific customer service or entertainment applications, whereas VASA-1 emphasizes real-time performance and high fidelity across various expressions and scenarios.

These technologies, including those used for deepfake creation, use similar principles of machine learning and data-driven animation to generate convincing video content.

VASA-1 is designed to synchronize audio with realistic facial movements, not just replicating appearances. This offers a more targeted application in scenarios where audio-visual synchronization is critical.

These models also generate high-quality images and have been instrumental in advancing the field of synthetic media.

While StyleGAN and DALL-E excel in creating static images, VASA-1 extends this by adding motion and synchronization with audio, moving beyond static art to interactive media.

As AI technologies capable of creating and manipulating media become more sophisticated, ethical considerations and governance become increasingly important. VASA-1’s development reflects a growing awareness of these issues, focusing on preventing misuse and ensuring privacy.

The ongoing improvements in neural networks, data processing, and model efficiency contribute to rapidly developing technologies like VASA-1. These advancements allow for more complex and nuanced applications, pushing the boundaries of what AI can achieve in media production.

The introduction of technologies like VASA-1 also impacts public perception of AI and raises the importance of media literacy. Understanding the capabilities and limitations of AI-generated media is crucial as these technologies become more prevalent in everyday life.

The development of VASA-1 and similar technologies will likely influence future AI and digital media regulations. These include digital content authenticity laws, the use of personal data in training AI, and the ethical deployment of synthetic media.

As AI-generated media becomes more common, it will continue to shape cultural and social norms around communication, entertainment, and information dissemination.

The ability to create lifelike animations of historical figures, celebrities, or fictional characters can enrich cultural experiences but also requires careful management to avoid distorting historical truths or misleading audiences.

While VASA-1 represents a significant technological achievement with unique features and applications, it also shares many challenges and opportunities inherent in the broader field of AI-driven media.

The continued evolution of these technologies will necessitate thoughtful consideration of their ethical, social, and regulatory implications.

Final Thoughts

Microsoft’s VASA-1 exemplifies the remarkable strides in AI-driven animation, showcasing both the technological prowess and the potential ethical dilemmas inherent in such advancements.

As an innovative tool capable of animating static images with synchronized audio, VASA-1 offers a glimpse into a future where digital media can be more interactive, personalized, and engaging.

VASA-1’s ability to produce lifelike animations from a single photo and an audio track holds tremendous potential across various sectors, including entertainment, education, accessibility, and customer service.

These applications demonstrate the positive impact of sophisticated AI tools on society, enhancing experiences and creating new opportunities for interaction and learning.

The technology also raises significant challenges, particularly in terms of privacy, ethical use, and potential misuse.

The ease with which realistic videos can be generated makes establishing robust ethical guidelines and strict regulatory frameworks imperative. These measures are crucial to prevent harm, such as spreading misinformation or creating unauthorized digital replicas of individuals.

As we look forward, the development of VASA-1 and similar technologies will likely continue to evolve, driven by further advancements in AI and machine learning.

The dialogue around these developments must remain open and inclusive, involving ethicists, regulators, technologists, and the public to ensure that these powerful tools are used responsibly and for the benefit of all.

VASA-1 is not just a technological achievement but a catalyst for broader discussions about AI’s role in our future society. Balancing innovation with ethical responsibility will be vital to navigating AI-generated media’s exciting yet challenging landscape.