Meta’s Fundamental AI Research team has released five AI models and research artifacts. Together they cover multi-modal text and image processing, more efficient language model training, music generation, AI-generated speech detection, and diversity in text-to-image generation.

Meta’s Five AI Models

Meta’s AI initiatives are spearheaded by the Fundamental AI Research (FAIR) team, a group dedicated to advancing artificial intelligence through open research and collaboration.

Established to push the boundaries of what AI can achieve, the FAIR team has consistently delivered cutting-edge research that addresses complex problems and fosters innovation.

Meta’s approach emphasizes the importance of working with the global AI community. By publicly sharing their research and tools, Meta aims to inspire iterations and improvements that can lead to responsible and beneficial advancements in AI technology.

This collaborative mindset is central to Meta’s strategy, as they believe that the collective efforts of researchers worldwide are crucial for AI’s ethical and practical development. The recent release of five new AI models exemplifies this philosophy.

These models demonstrate significant technical achievements and highlight Meta’s commitment to addressing critical issues such as efficiency in language model training, diversity in AI-generated content, and the detection of AI-generated media.

By focusing on both innovation and responsibility, Meta aims to set a standard for developing and applying artificial intelligence.

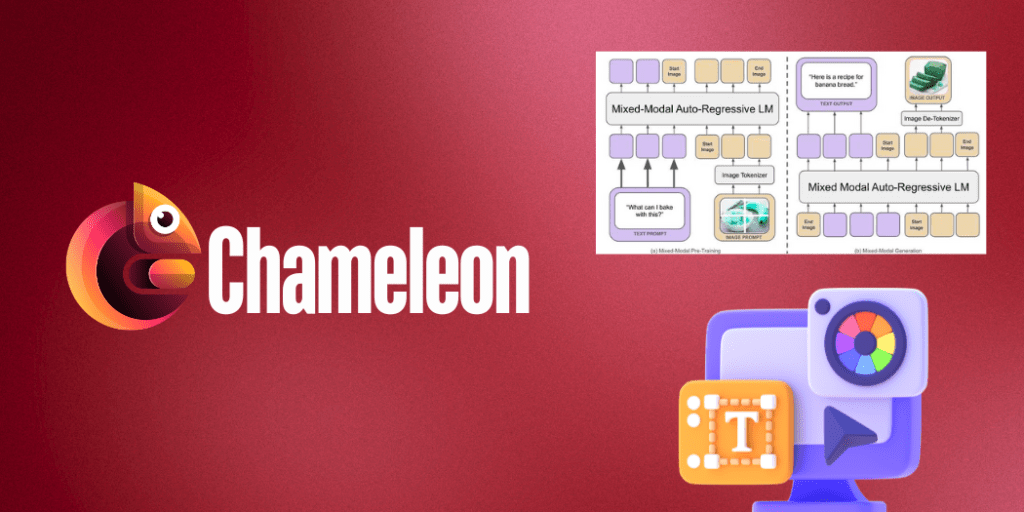

Chameleon Multi-Modal

Among the most notable releases from Meta’s recent AI initiatives is the Chameleon model, a groundbreaking advancement in multi-modal text and image processing.

Unlike traditional AI models that handle text and images separately, Chameleon is designed to simultaneously understand and generate text and images. This capability mirrors human cognitive functions, where we can process and interpret visual and textual information at the same time.

Chameleon represents a significant leap in AI technology, offering versatility in its applications. It can take any combination of text and images as input and produce any combination of text and images as output.

For instance, it can generate creative captions for photos, create detailed descriptions from visual inputs, or even combine both to develop new scenes based on given text and images.
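One way to picture "any combination of text and images" is as a single token sequence in which text tokens and image codes share one id space. The snippet below is only a minimal sketch under that assumption. The segment classes, the toy word-level tokenizer, the vocabulary size, and the marker ids are all hypothetical and are not taken from Meta's code.

```python
from dataclasses import dataclass
from typing import Dict, List, Union

# Hypothetical id layout: text ids first, then two image markers, then image codes.
TEXT_VOCAB_SIZE = 1000
BEGIN_IMAGE = TEXT_VOCAB_SIZE
END_IMAGE = TEXT_VOCAB_SIZE + 1
IMAGE_CODE_OFFSET = TEXT_VOCAB_SIZE + 2


@dataclass
class TextSegment:
    content: str


@dataclass
class ImageSegment:
    codes: List[int]  # assumed discrete codes produced by some image tokenizer


def encode_text(text: str, vocab: Dict[str, int]) -> List[int]:
    """Toy word-level tokenizer that assigns ids in order of first appearance."""
    ids = []
    for word in text.lower().split():
        if word not in vocab:
            vocab[word] = len(vocab)
        ids.append(vocab[word])
    return ids


def to_token_stream(segments: List[Union[TextSegment, ImageSegment]],
                    vocab: Dict[str, int]) -> List[int]:
    """Flatten interleaved text and image segments into one sequence of ids."""
    stream: List[int] = []
    for segment in segments:
        if isinstance(segment, TextSegment):
            stream.extend(encode_text(segment.content, vocab))
        else:
            stream.append(BEGIN_IMAGE)
            stream.extend(IMAGE_CODE_OFFSET + code for code in segment.codes)
            stream.append(END_IMAGE)
    return stream


vocab: Dict[str, int] = {}
stream = to_token_stream(
    [TextSegment("a cat"), ImageSegment([3, 7]), TextSegment("a dog")],
    vocab,
)
print(stream)
```

Because the output is also just a sequence of ids in the same space, a model trained on such streams could in principle emit text, image codes, or both, which is the behavior the article describes.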

This multi-modal approach opens up many possibilities for industries, from digital content creation to automated visual storytelling.

Meta explains that the ability to process and deliver text and images concurrently allows Chameleon to be utilized in innovative ways previously unattainable with unimodal models.

The potential use cases are vast, encompassing everything from enhancing user interactions in social media to providing more sophisticated tools for education and training.

By releasing Chameleon under a research license, Meta aims to foster further exploration and development within the AI community.

They hope that by sharing this advanced technology, others will build upon it, leading to new applications and improvements that benefit a wide range of fields. This collaborative effort aligns with Meta’s broader mission of advancing AI responsibly and inclusively.

Multi-Token Prediction

Meta’s new multi-token prediction technology addresses a fundamental inefficiency in traditional language model training. Typically, language models predict the next word in a sequence one at a time.

While this approach is straightforward and scalable, it is also inefficient. The model needs far more text than a person does to reach a comparable level of language fluency, which makes training both time-consuming and resource-intensive.

The multi-token prediction model changes this process by training the model to predict several future words at once rather than only the next one.

This advancement allows the model to learn and generate language patterns much more efficiently. By processing several words at once, the model can accelerate the training process, significantly reducing the amount of data and time needed to achieve high levels of fluency and accuracy.
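The difference between the two training objectives can be sketched in a few lines. The example below is illustrative only: it assumes one output head per future position and a loss that sums the cross-entropy of each head, and the function names and toy probabilities are hypothetical rather than Meta's implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple


def multi_token_targets(tokens: Sequence[str],
                        n_future: int) -> List[Tuple[List[str], List[str]]]:
    """Pair each prefix with the next n_future tokens it should predict."""
    examples = []
    for t in range(len(tokens) - n_future):
        context = list(tokens[: t + 1])
        targets = list(tokens[t + 1 : t + 1 + n_future])
        examples.append((context, targets))
    return examples


def multi_token_loss(head_probs: List[Dict[str, float]],
                     targets: List[str]) -> float:
    """Sum the cross-entropy of each head; head k predicts the k-th future token."""
    return sum(-math.log(head_probs[k][targets[k]]) for k in range(len(targets)))


tokens = ["def", "add", "(", "a", ",", "b", ")"]

# Classic next-token training: one target per position.
single = multi_token_targets(tokens, n_future=1)

# Multi-token training: three targets per position from the same context.
multi = multi_token_targets(tokens, n_future=3)

# Toy probabilities that three hypothetical heads assign to the correct tokens.
head_probs = [{"add": 0.5}, {"(": 0.5}, {"a": 0.25}]
loss = multi_token_loss(head_probs, multi[0][1])
print(multi[0], round(loss, 4))
```

With `n_future=1` the helper reduces to ordinary next-token prediction, so the multi-token setup can be read as extracting more training signal from each position of the same text.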

Meta has released these pretrained models for code completion under a non-commercial research license, highlighting their commitment to advancing AI technology through open collaboration.

The multi-token models have the potential to dramatically improve the efficiency of language model training, making it faster and more effective.

This technology can have far-reaching implications across various applications. For instance, it can enhance natural language processing tasks such as machine translation, text generation, and conversational AI. Developers can use these models to create more responsive and intelligent applications that require less computational power and training time.

By focusing on improving the efficiency of language model training, Meta aims to contribute to the broader AI community’s efforts to develop more advanced and accessible AI technologies.

The multi-token prediction approach not only addresses current limitations but also paves the way for future innovations in NLP and beyond.

JASCO Enhanced Text-to-Music

Meta’s JASCO model represents a significant advancement in text-to-music generation. This innovative model allows for creating music clips from text inputs, offering control and versatility previously unattainable.

Unlike existing models like MusicGen, which rely mainly on text inputs to generate music, JASCO can accept a variety of inputs, including chords and beats, providing users with more detailed control over the resulting music.

One of JASCO’s key features is its ability to interpret and incorporate these additional inputs to produce more complex and refined musical compositions.

This feature is valuable for musicians and composers who seek to generate music that aligns closely with their creative vision. For example, a user can input specific chords and rhythms alongside descriptive text to guide the model in producing music that fits a particular mood or style.
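To make the idea of combining text with chords and beats concrete, here is a small sketch of what such a request might look like as data. It is not JASCO's API: the `MusicPrompt` class, its fields, and the two helper functions are hypothetical and only show how timed chord and tempo information could sit alongside a text description.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class MusicPrompt:
    """Hypothetical request: free text plus optional symbolic controls."""
    text: str
    chords: List[Tuple[str, float]] = field(default_factory=list)  # (symbol, start in seconds)
    bpm: float = 120.0


def chord_at(prompt: MusicPrompt, t: float) -> Optional[str]:
    """Return the chord that is active at time t, or None before the first chord."""
    current = None
    for symbol, start in sorted(prompt.chords, key=lambda chord: chord[1]):
        if start <= t:
            current = symbol
        else:
            break
    return current


def beat_times(prompt: MusicPrompt, duration: float) -> List[float]:
    """List the beat onsets (in seconds) that fall inside the clip."""
    step = 60.0 / prompt.bpm
    times = []
    i = 0
    while i * step < duration:
        times.append(i * step)
        i += 1
    return times


prompt = MusicPrompt(
    text="warm lo-fi piano, relaxed mood",
    chords=[("C", 0.0), ("G", 2.0), ("Am", 4.0)],
    bpm=120.0,
)
print(chord_at(prompt, 2.5), beat_times(prompt, 2.0))
```

A text-only model would receive just the `text` field. The extra fields are where the finer control described above would come from.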

JASCO’s capabilities extend beyond simple music generation. It can be used in various creative and practical applications, such as composing video background scores, creating personalized music tracks for gaming environments, and even assisting in the songwriting process by providing a foundation for artists to build on.

This level of integration and control makes JASCO a powerful tool for amateur and professional musicians.

By offering JASCO under a research license, Meta encourages further exploration and innovation within AI-generated music.

They hope the AI community will build upon this technology, leading to new and exciting applications that push the boundaries of what AI can do in music creation.

The development of JASCO underscores Meta’s commitment to enhancing creative tools through AI, providing users with sophisticated technologies that empower artistic expression.

This approach not only enriches the field of music generation but also opens up new possibilities for how AI can foster creativity and innovation in the arts.

AudioSeal Detecting AI-Generated Speech

Meta’s AudioSeal represents a pioneering advancement in the detection of AI-generated speech. As generative AI tools become increasingly sophisticated, the potential for misuse, such as creating deepfake audio or spreading misinformation, has grown.

AudioSeal addresses this challenge by providing a reliable method to detect AI-generated speech within audio clips.

Meta describes AudioSeal as the first audio watermarking technique designed specifically for the localized detection of AI-generated speech. It works by embedding unique, identifiable markers within AI-generated speech, which can later be detected to verify the authenticity of the audio.

Because detection is localized, AudioSeal can pinpoint AI-generated segments within a longer audio clip, and Meta reports detection up to 485 times faster than previous methods. AudioSeal is released under a commercial license, which supports practical use in various fields.

For instance, media companies can use AudioSeal to ensure the integrity of audio content, preventing the distribution of manipulated or fake audio. Law enforcement agencies might employ this technology to verify the authenticity of audio evidence.

Social media platforms could integrate AudioSeal to detect and flag AI-generated content, helping to combat misinformation and protect users from deceptive practices.
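The embed-then-detect idea described above can be illustrated with a deliberately simple toy. The sketch below is not AudioSeal's method or API: it swaps in a classic keyed-noise watermark with per-frame correlation, and every name and parameter (the sine-wave "speech", the seed, the frame size, the strength) is an assumption chosen for illustration.

```python
import math
import random
from typing import List


def keyed_pattern(length: int, seed: int) -> List[float]:
    """Pseudo-random +/-1 sequence that only the holder of the seed can regenerate."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def embed_watermark(samples: List[float], start: int, end: int,
                    seed: int, strength: float = 0.05) -> List[float]:
    """Add a faint keyed pattern to samples[start:end]; other samples are unchanged."""
    pattern = keyed_pattern(len(samples), seed)
    marked = list(samples)
    for i in range(start, end):
        marked[i] += strength * pattern[i]
    return marked


def detect_watermark(samples: List[float], seed: int,
                     frame: int = 400, strength: float = 0.05) -> List[bool]:
    """Flag each frame whose correlation with the keyed pattern exceeds strength / 2."""
    pattern = keyed_pattern(len(samples), seed)
    flags = []
    for s in range(0, len(samples) - frame + 1, frame):
        corr = sum(samples[i] * pattern[i] for i in range(s, s + frame)) / frame
        flags.append(corr > strength / 2)
    return flags


# Toy "speech": a 4000-sample sine wave. Samples 1200..2799 stand in for the
# AI-generated portion, so only that span receives the watermark.
audio = [0.1 * math.sin(2 * math.pi * i / 50) for i in range(4000)]
marked = embed_watermark(audio, 1200, 2800, seed=7)
flags = detect_watermark(marked, seed=7)
print(flags)
```

The per-frame flags show what localized detection means in practice: the detector reports which parts of a clip carry the marker, not just a single yes or no for the whole file.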

Meta’s development of AudioSeal is part of a broader commitment to responsible AI research and development. By providing tools to detect and mitigate the misuse of generative AI, Meta aims to foster a safer and more trustworthy digital environment.

AudioSeal helps maintain the integrity of audio content and sets a precedent for the ethical use of AI technologies.

The impact of AudioSeal extends beyond its immediate applications. It represents a step forward in the ongoing effort to balance the benefits of AI innovation with the need for safeguards against its potential risks.

By encouraging the responsible use of AI, Meta continues to lead in setting standards for ethical AI development and deployment.

Text-to-Image Diversity

One of Meta’s recent initiatives aims to improve the diversity and representation in text-to-image models, addressing a critical issue in AI-generated content.

Text-to-image models generate images based on textual descriptions and often exhibit geographical and cultural biases.

These biases can lead to a lack of representation for specific regions and cultures, perpetuating stereotypes and limiting the inclusivity of AI-generated content.

Meta has taken significant steps to tackle these biases by developing automatic indicators that evaluate potential geographical disparities in text-to-image models.

These indicators help identify and measure the extent to which certain regions and cultures are underrepresented or misrepresented in the generated images.
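As a rough illustration of what an automatic disparity indicator might compute, the sketch below averages a per-image score by region and reports the gap between the best-served and worst-served regions. This is a hypothetical metric, not the one Meta released: the function name, the region labels, and the scores are all invented for the example.

```python
from typing import Dict, List, Tuple


def region_gap(scores_by_region: Dict[str, List[float]]) -> Tuple[Dict[str, float], str, float]:
    """Return mean score per region, the worst-served region, and the best-worst gap."""
    means = {
        region: sum(scores) / len(scores)
        for region, scores in scores_by_region.items()
    }
    best = max(means, key=means.get)
    worst = min(means, key=means.get)
    return means, worst, means[best] - means[worst]


# Made-up scores in [0, 1], e.g. how well generated images of "a house"
# match reference photos from each region.
scores = {
    "Europe": [0.8, 0.9],
    "Africa": [0.5, 0.7],
    "Asia": [0.7, 0.7],
}
means, worst, gap = region_gap(scores)
print(worst, round(gap, 2))
```

A gap near zero would suggest the model serves the listed regions about equally well on this score, while a large gap points to the region that needs attention.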

Meta conducted a large-scale annotation study involving over 65,000 annotations to further understand and address these biases.

This study gathered diverse perspectives on geographic representation, providing valuable insights into how different people perceive and evaluate the inclusivity of AI-generated images.

The findings from this study have been instrumental in developing more diverse and representative text-to-image models.

Meta has publicly released the results of this research, including the relevant code and annotations. This transparency allows other researchers and developers to utilize these resources to improve the diversity of their own models.

By sharing these tools and insights, Meta aims to foster a more inclusive approach to AI development across the industry.

Improving diversity in text-to-image models has far-reaching implications. It can enhance the quality and inclusivity of AI-generated content in various applications, from digital art and entertainment to educational materials and marketing.

By ensuring that AI systems represent a more comprehensive range of cultures and perspectives, Meta contributes to a more equitable and inclusive digital landscape.

This initiative reflects Meta’s broader commitment to responsible AI development. By addressing biases and promoting diversity, Meta sets a standard for ethical AI practices that prioritize inclusivity and representation.

This approach improves the technology and ensures that it serves a broader and more diverse audience.

Meta’s latest AI advancements highlight their commitment to innovation and responsible AI development.

The introduction of models like Chameleon, multi-token prediction, JASCO, and AudioSeal, alongside efforts to improve text-to-image diversity, showcases their focus on enhancing efficiency, creativity, and inclusivity in AI technology.

By openly sharing these breakthroughs, Meta aims to inspire further research and collaboration within the AI community, driving the development of advanced and ethical AI solutions.