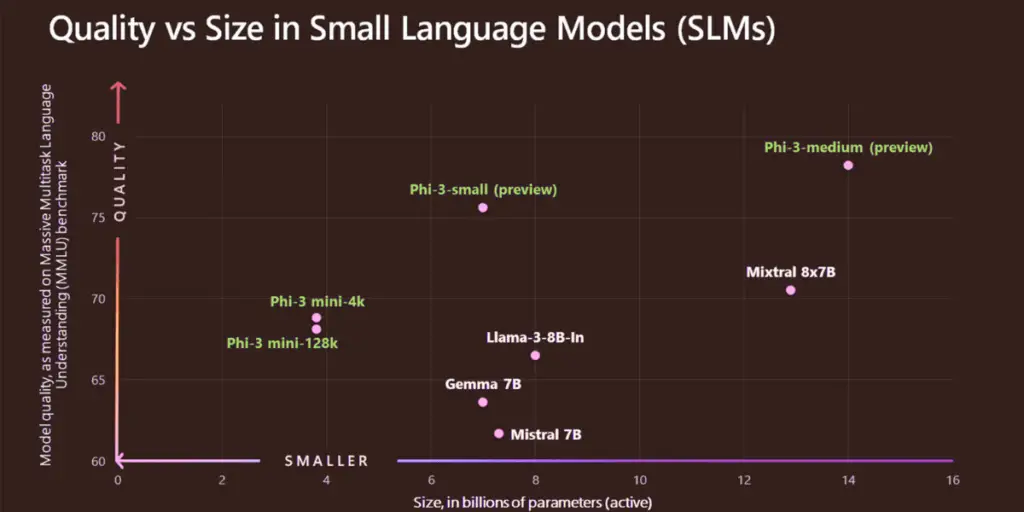

Microsoft has introduced the Phi-3 family of small language models (SLMs), beginning with Phi-3-mini. The models mark a strategic pivot toward smaller, more accessible AI tools that could broaden user engagement and functionality across many sectors.

The launch event, headlined by key Microsoft figures including Sonali Yadav and Sebastien Bubeck, introduced these small language models as cost-effective solutions tailored to organizations with limited resources.

With these models running locally, even on devices like smartphones, Microsoft is broadening the reach of AI and empowering users in unprecedented ways.

Microsoft’s Small Language Models

Microsoft has taken a strategic step with Phi-3, a new family of small language models whose first member is Phi-3-mini. These models represent a shift in Microsoft’s approach to AI toward more specialized, accessible, and cost-effective solutions.

The release of these models is not merely an addition to Microsoft’s impressive suite of technological offerings but a statement of intent towards creating a more inclusive AI future.

Phi-3-mini, the most compact model in the family, was officially unveiled on April 24, 2024. It is the first release in a trio of SLMs planned by Microsoft, each designed to cater to different user needs and computational environments.

Phi-3-mini, in particular, is designed for efficiency and affordability, making it an attractive option for smaller businesses and individual developers who might previously have found AI integration daunting due to cost and complexity.

The models are engineered to perform well in environments with limited computational resources. They can run locally on devices like smartphones or laptops without constant cloud connectivity.
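To make the on-device claim more concrete, here is a rough sketch that estimates how much memory a model's weights need at different numeric precisions. The 3.8-billion parameter count is the figure Microsoft has published for Phi-3-mini and is not stated in this article. The function name and the list of precisions are illustrative, and the estimate ignores activations, the attention cache, and runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to hold the weights, in gigabytes (10^9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


# Assumption: parameter count published for Phi-3-mini (not given in this article).
PHI3_MINI_PARAMS = 3.8e9

if __name__ == "__main__":
    # Lower precision (quantization) shrinks the footprint proportionally.
    for bits in (16, 8, 4):
        size = weight_memory_gb(PHI3_MINI_PARAMS, bits)
        print(f"{bits}-bit weights: ~{size:.1f} GB")
```

Under these assumptions, 4-bit weights come to roughly 1.9 GB, which suggests why a model of this size can plausibly fit on a recent smartphone while much larger models cannot.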

This local deployment capability is crucial for users in remote or network-constrained environments, enabling them to leverage AI’s power without traditional barriers.

The launch of these models underscores Microsoft’s commitment to broadening AI accessibility. By allowing these models to operate independently of the cloud, Microsoft ensures that even users in the most remote areas can benefit from AI technology.

For example, a rural farmer could use a Phi-3-mini-powered app to analyze crop health on the spot, making immediate and informed decisions without relying on distant cloud servers.

A standout feature of Phi-3-mini is its cost-effectiveness. Microsoft claims that it costs up to ten times less than competing models, without compromising performance.

This dramatic decrease in cost opens up new possibilities for smaller enterprises and startups to integrate advanced AI capabilities into their operations without the financial strain typically associated with such technologies.

The Phi-3 models are poised to become fundamental components of Microsoft’s AI strategy, reflecting a broader shift towards a portfolio of models catering to diverse scenarios and needs.

This strategic move not only enhances Microsoft’s product offerings but also sets a new standard for what is possible with accessible, efficient AI technology.

Benefits and Features of Phi-3-mini

Microsoft’s Phi-3-mini, the latest addition to its array of artificial intelligence models, is designed to make advanced AI technology more accessible and practical for a wide range of users.

Tailored to cater to small to medium-sized enterprises and individual developers, Phi-3-mini stands out for its efficiency, cost-effectiveness, and ease of integration.

Phi-3-mini is not just slightly cheaper: according to Microsoft, it is up to ten times less expensive than comparable models available on the market.
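As a back-of-the-envelope illustration of what an "up to ten times" difference could mean for a budget, the sketch below compares monthly spend at two per-token prices. Every number here is hypothetical: the baseline price, the monthly token volume, and the function name are chosen only to show the arithmetic, and the factor of ten is the upper bound of the claim, not a measured result.

```python
def monthly_cost(tokens_per_month: int, price_per_million_tokens: float) -> float:
    """Cost of processing a monthly token volume at a given per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


# Hypothetical figures, for illustration only.
BASELINE_PRICE = 10.00             # price per million tokens for a comparable model
SLM_PRICE = BASELINE_PRICE / 10    # upper bound of the "up to ten times" claim
TOKENS_PER_MONTH = 50_000_000

if __name__ == "__main__":
    baseline = monthly_cost(TOKENS_PER_MONTH, BASELINE_PRICE)
    slm = monthly_cost(TOKENS_PER_MONTH, SLM_PRICE)
    print(f"Comparable model: {baseline:.2f} per month")
    print(f"Small model:      {slm:.2f} per month")
    print(f"Difference:       {baseline - slm:.2f} per month")
```

With these made-up inputs the monthly bill drops from 500 to 50. Real savings depend on actual pricing, workload, and whether the smaller model handles the task well enough.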

This price point makes it particularly appealing for organizations with limited budgets but eager to integrate AI into their operations.

Because it runs locally and does not require substantial cloud computing resources, Phi-3-mini also helps users save on ongoing operational costs, further enhancing its economic appeal.

Available through Microsoft’s Azure AI model catalogue and on popular platforms like Hugging Face and Ollama, Phi-3-mini is readily accessible to developers worldwide.
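For developers who take the Ollama route, the following minimal sketch shows how a locally served model might be queried over Ollama's HTTP interface using only the Python standard library. It assumes an Ollama server is running on its default local port and that the model was pulled under the tag `phi3`; the helper names `build_request` and `generate` are illustrative, not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumption: the model was pulled under this tag (e.g. with `ollama pull phi3`).
MODEL_TAG = "phi3"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generation request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as resp:
        return json.loads(resp.read())["response"]


# Example call (requires a running Ollama server with the model available):
# print(generate("Summarize the benefits of small language models in one sentence."))
```

Because the request goes to `localhost`, the prompt and the response stay on the machine, which is the property the article highlights for offline and privacy-sensitive use.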

Phi-3-mini is also optimized for NVIDIA GPUs and integrated with NVIDIA Inference Microservices (NIM), giving users tooling to get the most out of the model’s performance and efficiency.

One of the most significant features of Phi-3-mini is its ability to operate offline, allowing users to deploy AI solutions in environments where internet connectivity is inconsistent or unavailable.

This model can run directly on users’ devices, from smartphones to PCs, facilitating faster processing and immediate response times without sending data to the cloud for analysis.

Phi-3-mini excels at simpler tasks that do not require deep reasoning or extensive data analysis, making it well suited to real-time applications like language translation, simple decision support, and basic content generation.

Its ability to produce quick outputs is especially valuable when speed is crucial, such as in customer service interactions or on-the-spot diagnostics.

From agriculture to retail, the practical applications of Phi-3-mini are vast. Businesses can leverage this model to enhance customer experiences, streamline operations, and make data-driven decisions swiftly and efficiently.

As previously mentioned, a farmer could use an app powered by Phi-3-mini to instantly analyze crop health, detect diseases, and receive actionable advice on pest control, all processed locally on a handheld device.

The Phi-3-mini model is a testament to Microsoft’s commitment to democratizing AI technology, making it more attainable for businesses of all sizes and developers at all skill levels.

Small Language Models in AI Accessibility

In 2024, developing small language models like Microsoft’s Phi-3-mini is increasingly recognized as a pivotal factor in making AI more accessible.

These models are designed to address the limitations of their larger counterparts and provide practical solutions that can be employed in diverse environments where resources and connectivity are constrained.

SLMs are engineered to be user-friendly, requiring less technical expertise to deploy and maintain. This makes them ideal for organizations and individuals without extensive AI training, reducing the barrier to entry into the AI space.

Small models can be easily fine-tuned and adapted to specific tasks or industries, allowing companies to leverage AI for niche applications without extensive customization.

The development and operational costs of SLMs are significantly lower than those of large language models. This cost-effectiveness makes it feasible for small and medium-sized enterprises to adopt AI technologies, which were previously out of reach due to financial constraints.

SLMs require less computational power, allowing them to run on less advanced hardware. This is particularly beneficial for organizations in developing regions where advanced computing infrastructure may not be readily available.

SLMs can operate independently of the cloud, facilitating their use in remote or network-limited areas. This local processing capability is crucial for providing AI-driven solutions where internet access is sporadic or unavailable.

The ability to process data locally and in real-time enhances the functionality of AI applications, from mobile apps that provide instant translations to diagnostic tools that offer immediate feedback in medical or agricultural settings.

By processing data locally on the device, SLMs help maintain user privacy, as sensitive information does not need to be transmitted to external servers.

Reduced reliance on cloud services minimizes vulnerability to data breaches and external attacks, offering a safer environment for users to deploy AI applications.

SLMs enable a wider range of businesses and communities to implement AI solutions, fostering inclusivity in technology usage.

More organizations accessing AI can lead to greater innovation across industries. Businesses can harness AI for unique applications tailored to their needs and challenges, driving sector-specific advancements.

Microsoft’s Broader AI Strategy

Microsoft’s development and release of small language models like Phi-3 and Phi-3-mini are integral to a broader strategic vision to reshape the artificial intelligence landscape.

This strategy is about diversifying Microsoft’s AI offerings and embedding AI technology into the fabric of daily business operations across the globe. Here’s how these initiatives fit into Microsoft’s larger AI strategy.

By creating smaller, more cost-effective models such as Phi-3-mini, Microsoft aims to make AI accessible to a broader audience, including small to medium-sized enterprises and individual developers who may not have the resources to leverage large-scale AI solutions.

Microsoft is focused on empowering more users to employ AI by simplifying its integration and usage, lowering the skill barriers traditionally associated with implementing AI technology.

Microsoft is expanding its portfolio to include various AI models that cater to different needs and capabilities, from robust large models capable of complex reasoning to nimble small models designed for efficiency and local use.

This diversification allows customers to choose the best AI model that fits their specific scenario, whether they require high-powered analytical capabilities or simple, cost-effective solutions for everyday tasks.

Microsoft invests heavily in research and development to continually improve the effectiveness and efficiency of its AI models. This includes enhancements in model training and fine-tuning capabilities, as well as adaptation to new technologies like quantum computing.

Microsoft collaborates with leading technological firms, startups, and academic institutions to push the boundaries of what AI can achieve. Recent investments, such as the $1.5 billion investment in G42, an AI firm based in the UAE, underscore its commitment to fostering global innovation ecosystems.

Integrating Microsoft’s AI models with Azure Cloud services offers seamless scalability and global reach, providing robust cloud computing resources to support AI deployments at any scale.

Microsoft is also advancing its edge computing capabilities, which involve deploying AI applications at the data source. This is crucial for applications requiring immediate data processing without latency issues associated with cloud computing.

Microsoft is committed to ethical AI development, ensuring that its models are used responsibly and incorporate privacy, security, and fairness by design.

As AI becomes more integral to business and daily life, Microsoft ensures its AI solutions comply with international regulations and standards, maintaining transparency and accountability in its AI deployments.

Recognizing the diverse needs of its global user base, Microsoft focuses on localizing its AI solutions to meet regional and cultural requirements, thereby enhancing user experience and utility.

Beyond commercial interests, Microsoft aims to leverage its AI technology to address societal challenges, including healthcare diagnostics, educational tools, and environmental conservation, contributing positively to communities worldwide.

Industry Implications

Microsoft’s strategic emphasis on small language models like Phi-3 and Phi-3-mini signals a transformative shift in the AI industry, with wide-reaching implications across various sectors. These developments are enhancing current applications of AI and paving the way for new innovations.

In healthcare, SLMs can support remote diagnostic tools, help manage patient data, and offer real-time treatment insights, especially in regions with limited access to healthcare facilities.

Farmers can use AI to monitor crop health, predict yields, and manage resources more efficiently, directly impacting food security and agricultural productivity.

Retailers can enhance customer service through personalized AI-driven recommendations and optimize supply chains with predictive analytics, improving customer experience and operational efficiency.

In finance, SLMs facilitate fraud detection, risk assessment, and customer service operations, allowing for safer and more personalized banking experiences.

The affordability and accessibility of SLMs lower the entry barriers for small businesses and startups, enabling them to adopt AI without significant investment in IT infrastructure.

Smaller companies can innovate rapidly with AI, competing more effectively with larger enterprises and potentially disrupting traditional market dynamics.

As AI tools become more widespread, the demand for AI literacy and related skills will increase across job sectors, necessitating updated training and education systems.

Contrary to concerns about AI replacing jobs, integrating SLMs may create new roles, particularly in managing and maintaining AI systems, data analysis, and sectors where human-AI collaboration is beneficial.

As AI becomes more embedded in daily activities, ensuring the privacy and security of data processed by these models becomes crucial.

There is a growing need for guidelines and regulations to ensure the ethical use of AI, preventing biases and ensuring that AI decisions are fair and transparent.

The AI landscape is expected to evolve, with SLMs becoming more sophisticated and capable of handling increasingly complex tasks.

The integration of AI with emerging technologies like quantum computing could exponentially increase processing capabilities and enable breakthroughs in fields such as materials science and complex system simulation.

Final Thoughts

Microsoft’s Phi-3 and Phi-3-mini models may mark a significant milestone in artificial intelligence, underscoring a strategic shift towards more accessible, efficient, and versatile AI solutions.

These small language models are designed to democratize AI technology, making it available and affordable for a broader range of users, from large corporations to small businesses and individuals in remote areas.

The implications of such advancements are profound, spanning various industries, including healthcare, agriculture, retail, and finance.

By lowering the barriers to entry and reducing the reliance on heavy computational resources, Microsoft is not only enhancing the capabilities of existing sectors but also paving the way for innovative applications that were previously out of reach.

The continued evolution of AI technologies promises to bring even more sophisticated tools into the mainstream, further transforming how businesses operate and how services are delivered across the globe.

The focus on ethical considerations and the development of regulations will play a crucial role in ensuring that the benefits of AI are realized equitably and responsibly.