In May 2024, at its annual I/O developer conference, Google unveiled Project Astra, an AI initiative poised to redefine the landscape of digital assistants.

Google DeepMind CEO Demis Hassabis touted Project Astra as “the future of AI assistants.” It is designed to be a universal agent capable of assisting users with myriad everyday tasks through enhanced interaction capabilities.

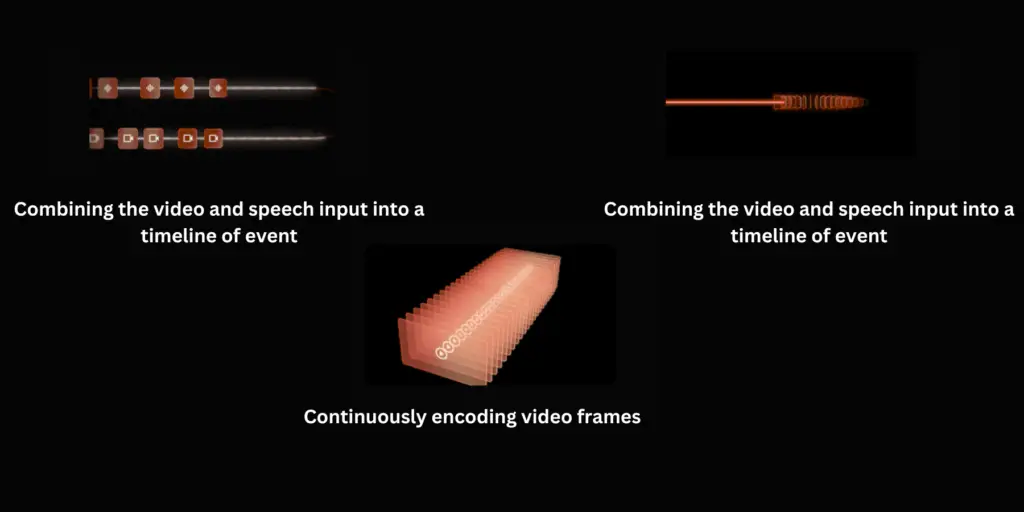

Unlike traditional AI assistants, which primarily rely on voice or text inputs, Astra combines multiple forms of input and output, including text, audio, images, and video.

This allows it to perform tasks with unprecedented context and precision, from identifying objects in real time to providing dynamic responses based on continuous input and environmental interaction.

Project Astra

At the core of Project Astra is Google’s Gemini family of models, which underpins the assistant’s ability to process and understand complex data.

This integration allows Astra to perform sophisticated tasks such as real-time object recognition, contextual understanding, and personalized interactions based on user preferences and history.

Project Astra is a “multimodal” AI, meaning it can process and generate responses using various data types—text, audio, images, and video.

This ability enhances its utility in diverse scenarios, from helping users identify objects with their camera to engaging in meaningful dialogue using voice and visual cues.

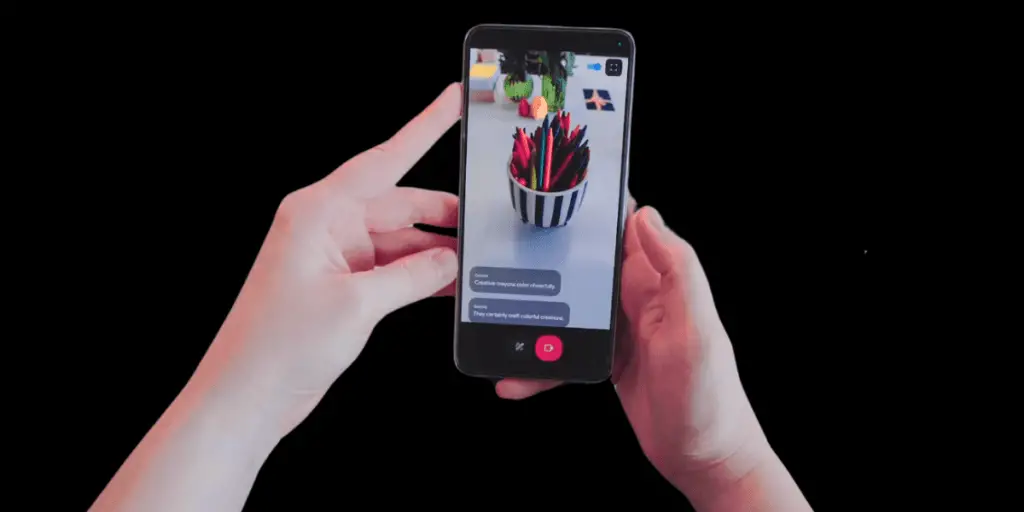

Using technology similar to that behind Google Lens, Astra can instantly recognize objects and provide relevant information or actions based on what it sees.

This feature is handy in scenarios where visual context is crucial, such as navigating new environments or learning about unfamiliar items.

Unlike traditional AI assistants with limited short-term memory, Project Astra boasts enhanced memory capabilities.

This allows it to remember interactions over a longer duration, recognize patterns in user behavior, and provide more personalized and context-aware responses.

Project Astra is designed to respond to user queries and anticipate needs based on the context it gathers from its surroundings and previous interactions.

This proactive approach can significantly enhance the user experience by making the assistant more intuitive and helpful in everyday tasks.

Google has also focused on improving the sound of Astra’s voice to make it more natural and pleasant to interact with.

Users can choose from various voices for a more personalized and comfortable interaction experience.

Astra’s capabilities are not limited to smartphones or computers; it is also designed to integrate seamlessly with smart glasses and other wearable devices.

Highlights at Google I/O

Google created a highly controlled demo zone at I/O 2024 to showcase Project Astra’s capabilities. Journalists and attendees were allowed entry in small groups to ensure each person could experience Astra’s features firsthand.

The setup included a large screen and a camera, enabling real-time interaction between the AI and the physical objects presented.

Using a combination of voice, visual data, and textual input, Astra demonstrated how it could seamlessly integrate various forms of communication to provide a cohesive user experience.

This was particularly evident in how Astra could continue stories or identify objects based on the inputs received from the surrounding environment.

One of the standout modes demonstrated was the “Storyteller,” where Astra could create engaging narratives based on objects in front of the camera.

As objects were introduced or changed, Astra adapted the story in real time, adding depth and characters based on the visual input and showcasing its narrative generation capabilities.

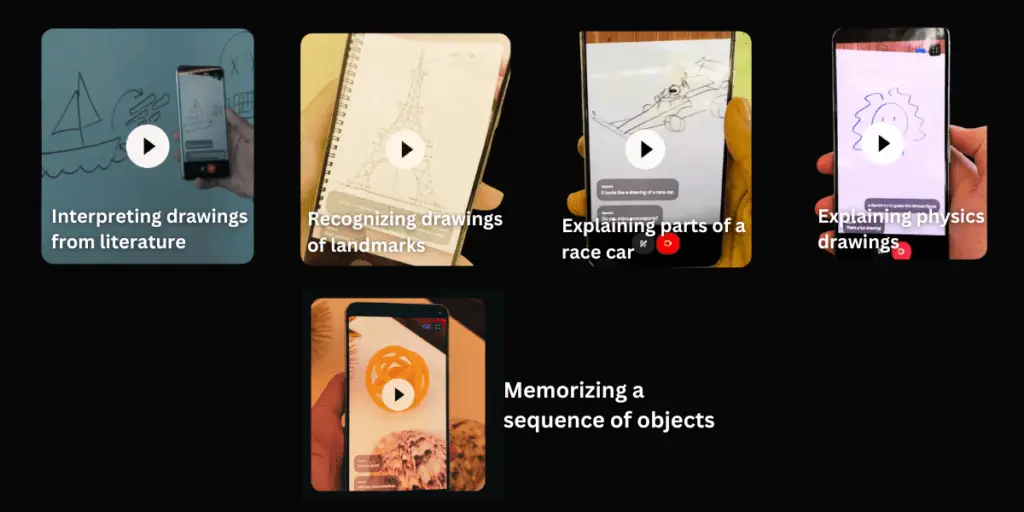

In “Pictionary” mode, Astra displayed its ability to interpret and recognize drawings, regardless of the participant’s artistic skill.

This mode highlighted its image recognition skills and capability to engage interactively with the user, guessing the objects being drawn and responding accordingly.

The “Free-Form” mode allowed for more open-ended interactions, demonstrating Astra’s ability to handle spontaneous user inputs and respond to them conversationally.

“Alliteration” mode showcased its linguistic creativity, generating content that adhered to stylistic constraints, further emphasizing its advanced language processing abilities.

Throughout the demos, Astra’s real-time processing and feedback capabilities were evident. It could adjust its responses based on the ongoing interaction dynamics, showing the AI’s adaptability and potential for personalized interaction.

The demo also aimed to showcase how natural and human-like Astra’s communication could be. Unlike traditional AI systems that often feel mechanical, Astra held conversations that felt more fluid and intuitive, akin to talking to a human assistant.

Comparison with Existing Technologies

Astra’s ability to analyze and react to live video streams goes well beyond what existing voice-only assistants can do.

Some existing devices already use cameras to analyze their surroundings and offer contextual information. However, they typically suffer from slow response times and limited functionality.

Project Astra’s use of advanced AI models allows quicker, more accurate recognition and interaction, making it more effective in real-time scenarios.

Most current AI technologies have limited short-term memory, restricting their ability to offer personalized and context-aware interactions over time.

Project Astra introduces enhanced memory capabilities, allowing it to remember past interactions and understand context more deeply.

This can significantly improve user experience by making the assistant more proactive and intuitive.

While some existing technologies integrate with wearables, they often do so with significant limitations.

Project Astra’s potential integration into Google Glass or similar devices could revolutionize how we interact with our environment by offering hands-free assistance that is both intelligent and seamlessly integrated into our daily routines.

Google has made specific enhancements to the sound of Astra’s voice to make it more natural and pleasant, providing options to switch between different voices.

This feature aims to bridge the gap between human and machine interaction, a subtle yet crucial aspect many current AI technologies struggle to perfect.

Unlike many AI agents that react primarily to direct queries, Project Astra is designed to be proactive.

It can learn from ongoing interactions and initiate actions based on accumulated knowledge. This sets it apart from more reactive systems, pushing the boundary towards a more autonomous, helpful AI.

Potential Applications

Project Astra could be integrated into smart home systems to provide real-time assistance with tasks such as identifying items in the refrigerator, suggesting recipes based on available ingredients, or helping with household chores by controlling other smart devices.

With its enhanced memory capabilities, Astra could remember essential dates, preferences, and routines, offering reminders and suggestions tailored to the user’s lifestyle.

Astra could transform workplace productivity by managing schedules, transcribing meetings in real time, or providing instant information retrieval during presentations or brainstorming sessions.

Astra could assist healthcare professionals in medical settings by pulling up patient records, displaying medical imaging, or providing real-time data analysis to support diagnoses.

By utilizing its multimodal capabilities, Astra could offer a more interactive and engaging learning experience.

It could also aid in the visual and auditory presentation of educational material, which could be particularly beneficial in special education or language learning.

Astra’s ability to recognize and interpret real-time environmental data can assist individuals with visual or hearing impairments, helping them navigate spaces more safely and independently.

Project Astra has the potential to be integrated into wearable technologies like a revamped Google Glass, offering users a hands-free, augmented reality interface where information is overlaid directly onto the real world, enhancing daily interactions and tasks.

Astra could provide real-time health monitoring and fitness coaching integrated into devices like smartwatches, using its data processing capabilities to offer personalized advice and alerts.

In public safety scenarios, Astra could recognize signs of emergencies or hazards and provide real-time alerts and information to users and authorities, improving response times and situational awareness.

Challenges and Limitations

As Project Astra relies heavily on collecting and analyzing vast amounts of personal data, including real-time video and audio, privacy concerns are paramount. Ensuring this data is handled securely and transparently is critical to maintaining user trust.

The capabilities of Astra, especially in terms of its surveillance potential, could lead to misuse if not properly regulated. There need to be strict guidelines and oversight to prevent privacy invasions.

While Astra promises high object recognition and interaction accuracy, ensuring consistent performance across diverse environments and scenarios remains a significant challenge. This includes processing dialects, speech accents, and visual input variations accurately.

Despite advancements, Astra may still face difficulties fully understanding context or intent, particularly in complex human interactions requiring nuanced emotional intelligence.

It is crucial to ensure that Astra operates without bias, especially given its deep learning foundations. Bias in training data can lead to discriminatory or unethical AI behavior, which must be vigilantly monitored and corrected.

There is a risk of increased dependence on AI for everyday decisions and tasks, potentially diminishing human skills and decision-making capabilities.

Astra’s advanced capabilities might require specific hardware features, which could limit its accessibility or raise costs for users.

Integrating Astra seamlessly with a wide range of existing digital infrastructure, from smartphones to enterprise systems, poses logistical and technical challenges.

Users may face a learning curve adapting to Astra’s advanced features and interaction modes. Ensuring that the assistant is user-friendly and intuitive is essential for widespread adoption.

Adapting Astra for global markets involves accommodating various languages, cultures, and social norms.

Astra must comply with a complex web of international laws and regulations concerning data protection, AI ethics, and technology use, which may vary significantly from one region to another.

Final Thoughts

By merging multimodal inputs with advanced learning capabilities, Astra promises to transform everyday interactions into seamless and intuitive experiences, offering assistance more aligned with human behavior than ever before.

The potential applications of Astra range from enhancing personal convenience in homes to driving efficiencies in professional settings and even improving accessibility for individuals with disabilities.

Its ability to integrate with wearables and smart devices further underscores its potential to become a ubiquitous presence in our lives, making technology more interactive and responsive.

With great power comes great responsibility. The challenges facing Astra, including privacy concerns, technical reliability, and ethical implications, are significant.

These issues are both technical and moral, requiring careful consideration of how AI is deployed and controlled.

The success of Astra will depend not only on its technological prowess but also on how well it can navigate these complex issues, ensuring that it enhances lives without compromising privacy or security.