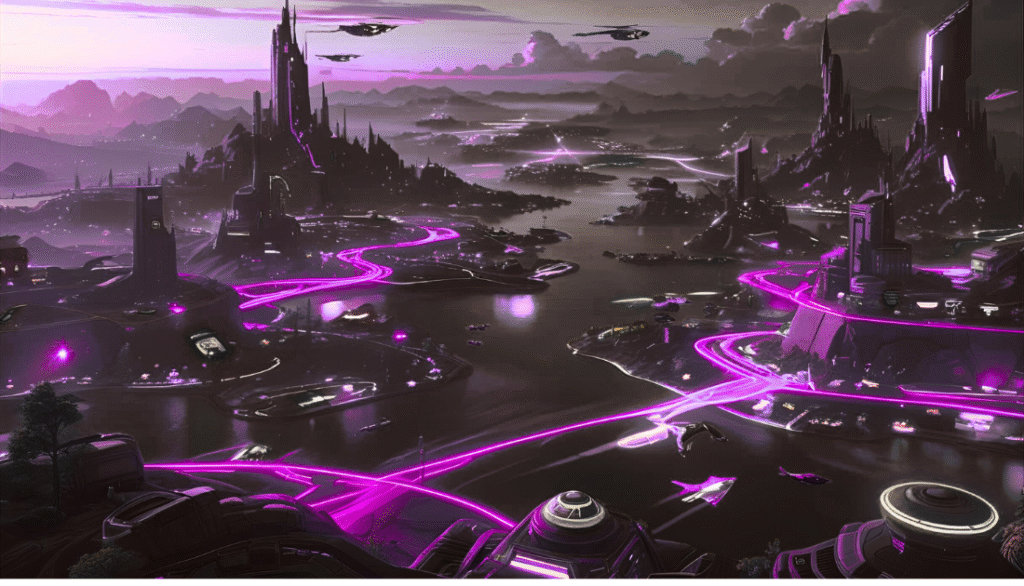

Tencent’s Hunyuan AI team has released Hunyuan3D World Model 1.0, an open-source generative model capable of producing interactive 3D environments from simple text or image prompts.

Highlights

- First of its kind: Open-source model that generates interactive, explorable 3D worlds from text or image prompts.

- Advanced generation pipeline: Combines 360° panoramic image generation with layered 3D mesh reconstruction for immersive environments.

- Explorable environments: Semantic decomposition and mesh layering allow users to move through and interact with generated scenes.

- Voyager module: A diffusion-based extension system generates seamless environment expansions beyond the initial scene.

- Strong benchmark results: Reported to outperform the open-source baselines it was compared with in visual realism, alignment, and structural consistency across text-to-world and image-to-world tasks.

- Practical use cases: Ideal for game devs, VR/AR creators, robotics training, and digital content generation.

- Plug-and-play compatibility: Supports mesh export to tools such as Unity, Unreal Engine, and Blender, and is compatible with image models such as Stable Diffusion, Kontext, and Hunyuan Image.

- Developer-friendly licensing: Released under a permissive license for commercial and research use, with repositories available on GitHub and Hugging Face.

The model is now publicly available under the “tencent-hunyuanworld-1.0-community” license, which allows both academic research and commercial use, subject to the terms of the license.

Developers can access the model via Tencent’s GitHub and Hugging Face repositories or use Tencent’s online platform for cloud-based generation.

Capabilities and Approach

Hunyuan3D World Model 1.0 introduces a pipeline that combines panoramic image generation with structured 3D mesh reconstruction, enabling the creation of explorable digital spaces.

The architecture is designed to maintain both visual richness and geometric consistency, offering a more integrated alternative to existing approaches, which tend to rely either on generated video frames alone or on 3D mesh generation alone.

The model uses a semantically layered 3D mesh system to convert panoramic images into navigable environments. This structured approach supports realistic depth, foreground interaction, and seamless scene extension, so the generated worlds can be explored rather than only viewed.
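
To make the step from a flat panorama to 3D geometry more concrete, here is a minimal sketch of how a 360° equirectangular depth map can be lifted into 3D points using the standard spherical mapping. The function name `unproject_equirect` and the axis convention (y up, z forward) are assumptions for illustration; the source does not describe how the model itself performs this step.

```python
import numpy as np

def unproject_equirect(depth: np.ndarray) -> np.ndarray:
    """Lift an (H, W) equirectangular depth map to (H, W, 3) points.

    Assumed convention: longitude runs from -pi (left) to +pi (right),
    latitude from +pi/2 (top) to -pi/2 (bottom), y is up, z is forward.
    """
    h, w = depth.shape
    # Sample at pixel centres, normalised to (0, 1).
    u = (np.arange(w) + 0.5) / w
    v = (np.arange(h) + 0.5) / h
    lon = (u - 0.5) * 2.0 * np.pi
    lat = (0.5 - v) * np.pi
    lon, lat = np.meshgrid(lon, lat)
    # Unit viewing direction for every pixel on the sphere.
    dirs = np.stack(
        [np.cos(lat) * np.sin(lon), np.sin(lat), np.cos(lat) * np.cos(lon)],
        axis=-1,
    )
    # Scale each direction by its depth to get a 3D point.
    return dirs * depth[..., None]

# Hypothetical input: a tiny panorama where everything is 2 units away.
points = unproject_equirect(np.full((4, 8), 2.0))
```

With a constant depth, every point lies on a sphere of that radius around the viewer. Neighbouring pixels could then be connected into triangles to form a mesh layer.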

From Panorama to Interactive Space

Hunyuan3D World Model 1.0 employs a multi-stage architecture:

- Panorama Generation – Utilizes a Panorama DiT diffusion model to generate high-resolution 360° panoramic images from text or visual input, enhanced by circular padding and elevation-aware augmentation to reduce visual artifacts.

- Semantic Decomposition – The panorama is segmented into distinct layers (sky, background, foreground objects), allowing for individual interaction with each element.

- Depth Estimation and Mesh Reconstruction – Layered depth alignment ensures geometric accuracy and proper occlusion. Foreground elements are reconstructed into separate meshes that can be repositioned or animated.

- Scene Expansion with Voyager – A video-diffusion module named Voyager extrapolates the scene beyond the initial frame, generating coherent extended environments for exploration.
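
The circular padding mentioned in the panorama generation stage can be illustrated with a short sketch. A 360° panorama wraps around, so its left and right edges are really neighbours. Padding each side with columns taken from the opposite side lets any local operation see across the seam. The helper names below are hypothetical, and this shows only the general technique with NumPy, not the model's actual implementation.

```python
import numpy as np

def circular_pad_width(panorama: np.ndarray, pad: int) -> np.ndarray:
    """Wrap-pad an (H, W, C) equirectangular image along its width."""
    # mode="wrap" fills the left border with columns from the right edge
    # and the right border with columns from the left edge.
    return np.pad(panorama, ((0, 0), (pad, pad), (0, 0)), mode="wrap")

def crop_width(padded: np.ndarray, pad: int) -> np.ndarray:
    """Remove the padding added by circular_pad_width."""
    return padded[:, pad:-pad, :] if pad > 0 else padded

# Hypothetical 2x4 single-channel "panorama" with distinct column values.
pano = np.arange(8).reshape(2, 4, 1)
padded = circular_pad_width(pano, 1)
restored = crop_width(padded, 1)
```

Here the first row of `pano` is `[0, 1, 2, 3]`, and after padding it becomes `[3, 0, 1, 2, 3, 0]`, so the seam columns sit next to each other.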

Performance Benchmarks

HunyuanWorld 1.0 has demonstrated strong results in benchmark tests against leading open-source panorama and 3D world generation models:

| Task | Metrics | Reported result | Compared against |
|---|---|---|---|
| Text to Panorama | NIQE, BRISQUE, CLIP-T, Q-Align | High visual realism and alignment | Diffusion360, PanFusion |
| Image to World | NIQE, CLIP-I, Fidelity & Consistency | Top scores for scene consistency | WonderJourney, DimensionX |
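
For readers unfamiliar with the alignment metrics, CLIP-T and CLIP-I are commonly computed as a cosine similarity between CLIP embeddings: prompt versus generated image for CLIP-T, and input image versus generated output for CLIP-I. The sketch below shows only that scoring step. The vectors are placeholders standing in for real CLIP embeddings, and the exact evaluation protocol behind the reported results is not described here.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two embedding vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Placeholder embeddings (assumption): real ones would come from a CLIP
# text encoder and image encoder and have hundreds of dimensions.
text_emb = np.array([1.0, 0.0, 1.0])
image_emb = np.array([1.0, 0.0, 0.0])

clip_t_like = cosine_similarity(text_emb, image_emb)
```

A higher score means the two embeddings point in a more similar direction, which is read as better agreement between the prompt (or input image) and the output.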

Applications

- Game development

- Virtual and augmented reality

- Robotics and AI simulation training

- Digital content creation workflows

By enabling seamless export of 3D meshes, developers can easily integrate generated content into engines like Unity, Unreal Engine, or Blender.
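
As an illustration of what mesh export involves, the following sketch serialises a triangle mesh to Wavefront OBJ text, a simple format that common 3D tools can import. The choice of OBJ and the function name `mesh_to_obj` are assumptions for this example; the source does not state which file formats the model's exporter produces.

```python
from typing import Iterable, Sequence

def mesh_to_obj(
    vertices: Iterable[Sequence[float]],
    faces: Iterable[Sequence[int]],
) -> str:
    """Serialise a mesh to Wavefront OBJ text.

    `faces` holds 0-based vertex indices; OBJ itself is 1-based.
    """
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"

# Hypothetical mesh: a single triangle in the z = 0 plane.
obj_text = mesh_to_obj(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    faces=[(0, 1, 2)],
)
```

Writing `obj_text` to a file with an `.obj` extension gives a mesh that can be opened in a 3D editor for inspection.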

Compatibility and Flexibility

Although the current version is built on Flux’s image generation backbone, HunyuanWorld 1.0 can also work with other generative models, including Hunyuan Image, Kontext, and Stable Diffusion.

This interoperability allows teams to adapt the model to their existing pipelines without major rework.

Mesh export support further enhances practical usability, giving creators freedom to manipulate scenes and assets in professional 3D workflows.

Industry Reception

Early adopters and developers have described Hunyuan3D World Model 1.0 as a significant step in the open-source generative space, citing its first-of-its-kind ability to create explorable, interactive 3D environments from natural language or visual cues.

Comments from developer communities point to its utility in simplifying content workflows and lowering the barrier for 3D worldbuilding.

“It creates full panoramic environments, with mesh exports and interactive objects.”

— Reddit user, early tester

“First open-source 3D world generation model with interactive components.”

— Industry observer, GitHub

While an official API has not yet been released, the current open-source version lays the groundwork for future integrations and toolchains.