Meta Platforms Inc. has recently unveiled two monumental advancements in artificial intelligence development: a pair of AI computing clusters, each boasting an impressive array of 24,576 GPUs.

Built on the cutting-edge Grand Teton hardware platform, these clusters represent a significant leap forward in Meta’s capability to train and refine generative AI models, including the much-anticipated next-generation model, Llama 3.

This development marks a pivotal moment for Meta and the broader AI research and development landscape, as it showcases the company’s commitment to pushing the boundaries of what’s possible with AI technology.

These clusters are not merely an upgrade in hardware; they symbolize Meta’s ongoing commitment to innovation and its vision for the future of AI.

By significantly enhancing the computational power at their disposal, Meta aims to accelerate the development of more complex and capable AI systems.

This ambition aligns with the company’s broader goals of achieving artificial general intelligence, a level of AI that closely mimics human cognitive abilities.

Meta’s history of open-sourcing its hardware designs, including contributions to the Open Compute Project, reflects a commitment to transparency and collaboration in the AI field.

This approach benefits Meta and enriches the global AI development community by setting new benchmarks for what is achievable and encouraging a culture of shared progress.

The announcement of these new AI clusters is a testament to the rapid evolution of AI technologies and the increasing importance of computational infrastructure in realizing the next generation of AI capabilities.

As it continues to expand its AI infrastructure, the implications for future AI research, development, and applications are profound, promising new levels of creativity, efficiency, and innovation in the AI domain.

The Grand Teton Hardware Platform

The Grand Teton hardware platform represents a significant milestone in Meta Platforms Inc.’s journey towards advancing artificial intelligence technology.

This state-of-the-art platform is the foundation upon which Meta’s newly unveiled AI computing clusters, each equipped with 24,576 GPUs, are built.

The design and implementation of the Grand Teton platform underscore Meta’s commitment to pushing the boundaries of AI research and development, particularly in generative AI models like the forthcoming Llama 3.

The Grand Teton platform is designed to house thousands of Nvidia Corp.’s H100 GPUs, the latest and most potent GPUs intended for AI and high-performance computing tasks.

This high density of GPUs is a leap from Meta’s previous clusters, which contained around 16,000 Nvidia A100 GPUs, indicating a substantial upgrade in computational capacity.

Grand Teton boasts four times as much host-to-GPU bandwidth as its predecessor, the Zion-EX platform.

This increase in bandwidth, coupled with twice as much compute and data network bandwidth and a doubled power envelope, allows for more efficient data processing and energy utilization, significantly enhancing AI model training and development capabilities.

The platform incorporates Meta’s latest Open Rack power and rack infrastructure architecture. This design offers flexibility for data centre layouts by allowing power shelves installed anywhere within a rack.

Such flexibility facilitates customized server configurations, optimizing throughput capacity per server and reducing the overall rack count.

The Grand Teton platform’s architectural advancements provide Meta with the infrastructure to train more extensive and complex generative AI models.

By increasing their AI clusters’ computational power and efficiency, Meta aims to accelerate the development cycle of AI technologies, paving the way for more sophisticated AI applications.

Meta has a rich history of open-source hardware designs to contribute to the broader AI and tech communities.

Following in the footsteps of previous contributions, such as the ZionEX cluster, the development of the Grand Teton platform and Meta’s open rack design have been shared with the Open Compute Project.

This initiative, founded by Meta in 2011, promotes open innovation and collaboration among tech companies. It allows others to build upon Meta’s advancements and further the collective progress in AI technology.

Design and Architecture

The design and architecture of Meta’s new AI computing clusters are a testament to the company’s innovative approach to advancing artificial intelligence research and development.

Each cluster, powered by the Grand Teton hardware platform, comprises an impressive 24,576 GPUs, reflecting Meta’s commitment to building state-of-the-art computational infrastructure.

These clusters are specifically engineered to support training next-generation generative AI models, including Llama 3, and to facilitate Meta’s ambitious AI research endeavours.

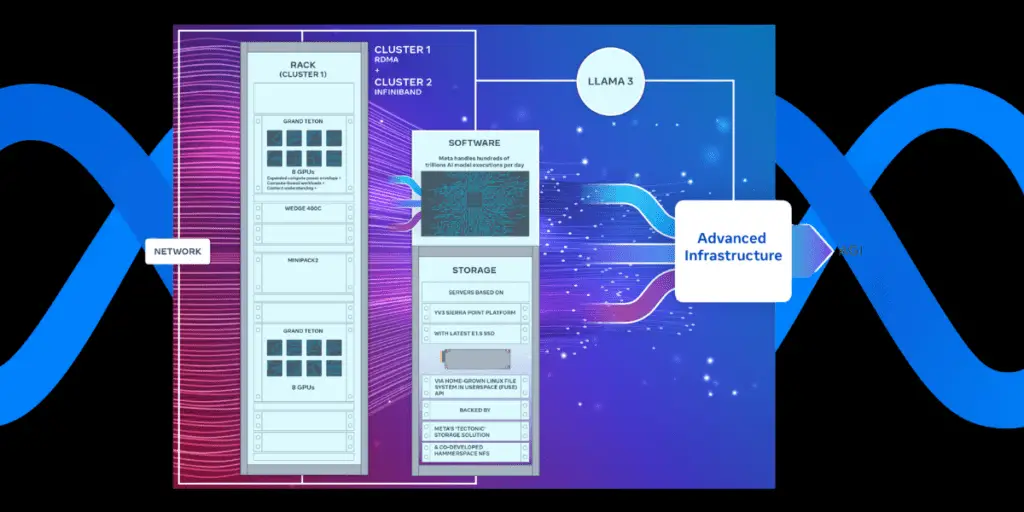

A notable feature of these clusters is their adoption of two distinct networking fabrics, highlighting Meta’s focus on optimizing data flow and communication efficiency across such a vast computational network.

One cluster utilizes Remote Direct Memory Access over Converged Ethernet, which allows for high-throughput and low-latency networking.

This setup is based on Arista Networks Inc.’s Arista 7800 with Wedge400 and Minipack2 OCP rack switches, demonstrating Meta’s commitment to leveraging cutting-edge technology to enhance cluster performance.

The other cluster employs NVIDIA’s Quantum2 InfiniBand, a high-performance networking choice that provides superior scalability and data transfer rates.

This choice reflects Meta’s strategy to experiment with diverse networking solutions to identify the most influential architecture for AI model training and development tasks.

At the heart of these clusters’ storage architecture is Meta’s Tectonic filesystem, a custom-built solution designed to manage the synchronized I/O demands that come with running AI workloads across thousands of GPUs.

The Tectonic filesystem is crucial for handling the massive data flows and checkpointing processes required for training complex AI models, ensuring data integrity and efficient access patterns during the training process.

The clusters are built on the YV3 Sierra Point server platform, tailored to accommodate Meta’s specific requirements for AI workloads. This platform supports Meta’s most advanced E1.S solid-state drives, optimizing storage performance and capacity to meet the demands of large-scale AI model training.

Engineering team has customized the clusters’ network topology and routing architecture to maximize data throughput and minimize latency. Deploying Nvidia’s Collective Communications Library facilitates optimized communication routines for the clusters’ GPUs, enhancing the overall efficiency of distributed AI training processes.

Challenges and Solutions

Developing AI computing clusters of the scale and complexity Meta has embarked upon with its new 24,576 GPU clusters presents many challenges.

The ambition to push the frontiers of generative AI models and research, such as developing Llama 3, necessitates overcoming substantial technical hurdles.

It’s innovative solutions to these challenges highlight the company’s commitment to advancing AI technology and infrastructure.

One of the primary challenges Meta faced was debugging at scale. Traditional debugging tools and techniques often need to be revised when applied to the complexities of distributed computing across thousands of GPUs.

Meta’s solution was to collaborate with Hammerspace to develop interactive debugging tools tailored to their storage system.

Introducing a “distributed collective flight recorder” provided a mechanism for troubleshooting distributed training. It allowed engineers to track and analyze operations across the cluster in real-time.

The initial deployment of the clusters revealed that “out of the box,” intern-node communication did not perform as expected, with bandwidth utilization being extremely variable.

This variability posed a significant problem for the efficient training of AI models, which rely on consistent and high-speed data exchange.

Meta addressed this issue by tuning job schedulers and optimizing network routing within the cluster. These adjustments ensured that bandwidth utilization was consistently greater than 90%, a crucial factor for maintaining high performance in distributed AI training tasks.

Integrating NVIDIA’s H100 GPUs brought about the challenge of fully leveraging their capabilities, particularly the support for 8-bit floating-point operations, which can significantly accelerate AI training.

Meta focused on refining their PyTorch framework implementation to better utilize the cluster hardware. This effort included developing parallelization algorithms and addressing initialization bottlenecks, reducing the initialization time from hours to minutes.

Such improvements enhance the efficiency of AI model training and ensure that the substantial investment in hardware delivers maximum return. Meta’s challenges and solutions in developing these clusters have broader implications for the AI community and industry.

By sharing their experiences and innovations, Meta contributes to a knowledge base that can help other organizations navigate similar challenges.

The company’s commitment to open innovation and collaboration, as evidenced by its contributions to the Open Compute Project, encourages a collective approach to advancing AI technology.

Meta’s AI Infrastructure Roadmap

Recent unveiling of two new AI computing clusters, each equipped with 24,576 GPUs, marks a significant milestone in the company’s AI infrastructure roadmap.

These clusters, built on the advanced Grand Teton hardware platform, are pivotal in Meta’s ongoing efforts to push the boundaries of artificial intelligence, specifically generative AI models such as the upcoming Llama 3.

The creation and deployment of these clusters are just the beginning. Meta’s broader vision encompasses a comprehensive strategy to significantly expand its AI capabilities and infrastructure, setting the stage for ambitious advancements in AI technology.

One of the long-term objectives driving Meta’s infrastructure expansion is the pursuit of artificial general intelligence. AGI represents a form of AI that exhibits human-like intelligence across a wide range of cognitive tasks. This goal has long been the holy grail of AI research.

The computational power and efficiency provided by Meta’s new clusters are critical in advancing towards this goal. They offer the necessary resources to train more complex and sophisticated AI models that can learn, reason, and interact in ways akin to human intelligence.

Meta’s announcement highlights an ambitious plan to continue growing its AI infrastructure at an unprecedented scale. By the end of 2024, Meta aims to incorporate 350,000 NVIDIA H100 GPUs into its infrastructure, which will significantly amplify its computing power.

This expansion is not just about increasing the number of GPUs; it’s about creating a computational ecosystem capable of supporting the next wave of AI innovations.

Envisions a portfolio featuring compute power equivalent to nearly 600,000 H100s, underscoring the company’s commitment to leading the charge in AI development.

A key component of Meta’s strategy is its dedication to open innovation and collaboration in AI technology development.

The company has a history of open-sourcing its hardware designs, including its contributions to the Open Compute Project, founded in 2011. This commitment to openness extends to its recent activities within the AI Alliance, launched in collaboration with IBM.

The alliance aims to foster an open ecosystem that enhances transparency and trust in AI, promotes shared advancements, and ensures that the benefits of AI technologies are accessible to a broad community.

The company has encountered and addressed issues related to debugging and optimizing performance across thousands of GPUs.

Meta is working to build interactive debugging tools, enhance network routing, and optimize job schedulers, which are part of its ongoing work to ensure that its AI clusters operate at peak efficiency.

These efforts demonstrate Meta’s proactive approach to overcoming technical hurdles, ensuring its infrastructure can support the demanding requirements of advanced AI model training.

Final Thoughts

The unveiling of Meta’s two new AI computing clusters, each equipped with a staggering 24,576 GPUs, is a monumental step in the evolution of artificial intelligence technology and infrastructure.

Built on the innovative Grand Teton hardware platform, these clusters showcase Meta’s engineering prowess and clearly signal the company’s ambitious vision for the future of AI.

As Meta delves deeper into the realms of generative AI with the development of models like Llama 3 and sets its sights on the elusive goal of artificial general intelligence, the significance of this advancement cannot be overstated.

It approach, blending cutting-edge technology with a commitment to open innovation and collaboration, exemplifies a forward-thinking strategy in the AI landscape.

By planning to expand its AI infrastructure to include 350,000 NVIDIA H100 GPUs, Meta is not just preparing for the future; it is actively shaping it.

This expansion, aimed at achieving compute power equivalent to nearly 600,000 H100s, underscores the scale of Meta’s ambition and its dedication to pushing the boundaries of what AI can achieve.

The challenges faced and overcome during the development of these clusters—ranging from debugging at scale to optimizing network performance—highlight the complex technical hurdles accompanying such groundbreaking work.

Yet, Meta’s proactive solutions and collaborative efforts, particularly within frameworks like the AI Alliance, demonstrate a commitment to advancing its own capabilities and contributing to the broader AI community.

Meta’s new AI computing clusters represent a significant milestone in the journey towards more advanced, efficient, and potentially transformative AI technologies.

As the company continues to expand its infrastructure and refine its AI models, the implications for future AI research, development, and application are profound.

Vision for AI, characterized by ambitious goals and a collaborative spirit, offers a glimpse into a future where AI’s potential is fully realized, benefiting industries, societies, and individuals worldwide.