Tailored specifically for the Apple Vision Pro, visionOS offers a unique environment where developers can create immersive, spatial computing experiences.

The platform blends familiar frameworks and tools with new features, enabling developers to craft applications that use depth, space, and richer interactivity.

As we delve into the world of visionOS, we will explore its new capabilities, its APIs, and the creative possibilities it opens up.

What’s New in visionOS

One of the key innovations in visionOS 2 is a set of volumetric APIs, which enable richer spatial experiences that make full use of depth and space.

Volumes can now be resized dynamically with the SwiftUI scene modifier `windowResizability`.

Developers can choose to have volumes maintain a constant size or scale dynamically, and they can now affix ornaments to volumes, adding another layer of interactivity.
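The following SwiftUI sketch shows how these volume options might fit together. It assumes the visionOS 2 SDK; the asset name `Globe` is a hypothetical placeholder, and the exact modifier signatures (in particular `defaultWorldScaling(_:)` and the 3D ornament anchor) should be checked against Apple's current documentation.

```swift
import SwiftUI
import RealityKit

/// A minimal sketch of a resizable volume with an ornament (visionOS 2 SDK assumed).
/// "Globe" is a hypothetical USDZ asset in the app bundle.
@main
struct GlobeApp: App {
    var body: some Scene {
        WindowGroup {
            Model3D(named: "Globe")
                // Attach an ornament to the bottom-front edge of the volume.
                .ornament(attachmentAnchor: .scene(.bottomFront)) {
                    Text("Drag the edges to resize")
                        .padding()
                        .glassBackgroundEffect()
                }
        }
        .windowStyle(.volumetric)
        // Initial size of the volume in physical units.
        .defaultSize(width: 0.6, height: 0.6, depth: 0.6, in: .meters)
        // Let the volume's size follow its content.
        .windowResizability(.contentSize)
        // Scale like a window as it moves away, instead of keeping a fixed physical size.
        .defaultWorldScaling(.dynamic)
    }
}
```

Leaving out `defaultWorldScaling(.dynamic)` keeps the default behavior, in which the volume behaves like a physical object of constant size.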

Another exciting addition is TabletopKit, a new framework designed for creating collaborative experiences centred around a table.

This framework simplifies the manipulation of cards and pieces, the establishment of placements and layouts, and the definition of game boards, making it easier to develop engaging and interactive tabletop games.

visionOS also enhances enterprise solutions with new APIs that offer greater sensor access and control.

These APIs provide access to the primary camera, spatial barcode and QR code scanning, and the Apple Neural Engine, allowing developers to create more powerful and sophisticated applications.

Updates to inputs on Apple Vision Pro offer developers the flexibility to decide whether the user’s hands should appear in front of or behind the digital content. This allows for more intuitive and natural interactions within the app.

The capabilities for scene understanding have also been significantly extended. Planes can now be detected in all orientations, allowing objects to be anchored on various surfaces within the user’s environment.

Room Anchors consider the user’s surroundings on a per-room basis, providing a more contextually aware experience.

The new Object Tracking API enables content to be attached to individual objects around the user, enhancing the interactivity and realism of the app.

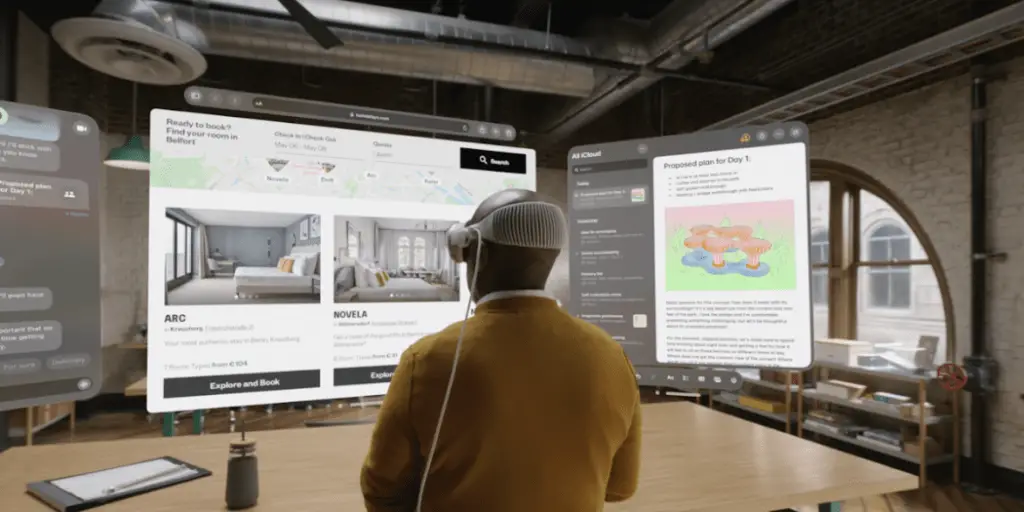

visionOS offers a spectrum of immersion, providing an infinite spatial canvas for users to explore, experiment, and play. Developers have the freedom to create experiences that are either connected to the user’s surroundings or completely immersive.

Apps can start in a window, incorporate 3D content, transition to a fully immersive scene, and seamlessly return, offering a fluid and dynamic user experience.

Enhanced Inputs and Features

One of the critical advancements is the update to input options on Apple Vision Pro, where developers can now control whether a user’s hands appear in front of or behind the digital content.

This flexibility allows for more natural and personalized interactions, enhancing the overall user experience.
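One way to express this preference in code is the `upperLimbVisibility(_:)` scene modifier. In the minimal sketch below, the scene type name and the ID `sculpt` are hypothetical, and the space is assumed to be opened from elsewhere in the app (for example with `openImmersiveSpace`). Hiding the upper limbs means the passthrough hands and arms are not drawn over the app's content, which suits apps that render their own virtual hands.

```swift
import SwiftUI
import RealityKit

/// A minimal sketch: an immersive space that hides the user's passthrough
/// hands and arms. "SculptSpace" and the ID "sculpt" are hypothetical.
struct SculptSpace: Scene {
    var body: some Scene {
        ImmersiveSpace(id: "sculpt") {
            RealityView { content in
                let block = ModelEntity(
                    mesh: .generateBox(size: 0.3),
                    materials: [SimpleMaterial(color: .gray, isMetallic: false)]
                )
                // About chest height, 1 m in front of the starting position.
                block.position = [0, 1.2, -1]
                content.add(block)
            }
        }
        // .hidden: the real hands do not occlude the digital content.
        // .visible: the real hands stay visible in front of the content.
        .upperLimbVisibility(.hidden)
    }
}
```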

visionOS has extended its capabilities for scene understanding. Now, planes can be detected in all orientations, enabling objects to be anchored on various surfaces around the user.

This improvement allows for more accurate and stable placement of digital content in the real world. The introduction of Room Anchors means that the user’s surroundings are considered on a per-room basis, making the spatial interactions more contextually aware and tailored to the environment.
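As a sketch of how this might look with ARKit on visionOS 2, the hypothetical `SurroundingsTracker` class below requests planes in all three alignments (including the new `.slanted` alignment) and watches room anchors. It assumes the app has an open immersive space and that the user has granted world-sensing permission (an `NSWorldSensingUsageDescription` entry in Info.plist), because ARKit only delivers this data under those conditions.

```swift
import ARKit

/// A minimal sketch (visionOS 2 SDK assumed): detect planes in every
/// orientation and report when an update concerns the user's current room.
@MainActor
final class SurroundingsTracker {
    private let session = ARKitSession()
    private let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical, .slanted])
    private let rooms = RoomTrackingProvider()

    func start() async {
        do {
            try await session.run([planes, rooms])
        } catch {
            print("Failed to start ARKit session: \(error)")
            return
        }

        // Plane updates for horizontal, vertical and slanted surfaces.
        Task {
            for await update in planes.anchorUpdates {
                let plane = update.anchor
                print("\(update.event) \(plane.alignment) plane classified as \(plane.classification)")
            }
        }

        // Room updates: each RoomAnchor describes one room the device has mapped.
        Task {
            for await update in rooms.anchorUpdates {
                if update.anchor.isCurrentRoom {
                    print("User is in room \(update.anchor.id)")
                }
            }
        }
    }
}
```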

Another notable feature is the new Object Tracking API, which lets developers attach content to individual objects in the user’s surroundings.

This capability opens up new possibilities for interactive and dynamic applications, where digital content can respond and adapt to real-world objects and movements.
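A minimal sketch of object tracking with ARKit on visionOS 2 follows. It assumes a reference object file named `Mug.referenceobject` (hypothetical) has been created beforehand, for example with the object tracking template in Create ML, and bundled with the app; as with other ARKit data, an open immersive space is assumed.

```swift
import ARKit
import Foundation

/// A minimal sketch of object tracking (visionOS 2 SDK assumed).
/// "MugTracker" and "Mug.referenceobject" are hypothetical names.
@MainActor
final class MugTracker {
    // The session is stored so it stays alive while tracking runs.
    private let session = ARKitSession()

    func start() async throws {
        guard let url = Bundle.main.url(forResource: "Mug", withExtension: "referenceobject") else {
            print("Mug.referenceobject is missing from the app bundle")
            return
        }
        let mug = try await ReferenceObject(from: url)
        let provider = ObjectTrackingProvider(referenceObjects: [mug])
        try await session.run([provider])

        for await update in provider.anchorUpdates {
            let anchor = update.anchor
            // originFromAnchorTransform is the object's pose in world space;
            // content attached to the mug would follow this transform.
            print("\(update.event): tracked=\(anchor.isTracked), pose=\(anchor.originFromAnchorTransform)")
        }
    }
}
```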

visionOS also emphasizes creating immersive and fluid user experiences. With the ability to seamlessly transition between different levels of immersion, apps can start in a simple window, incorporate 3D content, move into a fully immersive scene, and quickly return to a non-immersive state.

This fluidity allows developers to design experiences that can adapt to the user’s needs and preferences, providing a more engaging and flexible interaction with the app.

Immersive Experiences

visionOS improves the way immersive experiences are created and enjoyed, giving developers and users a rich spatial computing environment.

At the heart of this innovation is the concept of a spatial canvas, which provides infinite possibilities for exploration, experimentation, and play.

This canvas allows developers to rethink and redesign their applications, transitioning seamlessly from traditional 2D interfaces to fully immersive 3D environments.

The flexibility of visionOS lies in its ability to support different levels of immersion. By default, apps launch into the Shared Space, where they coexist alongside other applications, much like multiple windows on a Mac desktop.

In this space, users can interact with various apps simultaneously, repositioning and resizing windows and volumes to suit their preferences.

For a more immersive experience, apps can open into a dedicated Full Space. In this environment, only the app’s content is visible, allowing for a deeper engagement with the digital experience.

Within a Full Space, developers can create unbounded 3D content, open portals to different worlds, or fully immerse users in a new environment.

This flexibility ensures that apps can offer both connected and fully immersive experiences, depending on the user’s needs and the app’s purpose.

The seamless transition between different levels of immersion is a crucial feature of visionOS. Developers can design experiences that start in a windowed mode, bring in 3D content as needed, transition to a fully immersive scene, and return to the original state effortlessly.
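The sketch below illustrates this flow with SwiftUI's scene types: the app starts with a window in the Shared Space and can open, then dismiss, an `ImmersiveSpace`. The app name, view name, and space ID are hypothetical; the environment actions `openImmersiveSpace` and `dismissImmersiveSpace` are the standard SwiftUI ones on visionOS.

```swift
import SwiftUI
import RealityKit

/// A minimal sketch: a window in the Shared Space that can open and
/// dismiss a Full Space. "ExplorerApp", "LauncherView" and "explore" are hypothetical.
@main
struct ExplorerApp: App {
    var body: some Scene {
        WindowGroup {
            LauncherView()
        }

        ImmersiveSpace(id: "explore") {
            RealityView { content in
                let sphere = ModelEntity(
                    mesh: .generateSphere(radius: 0.2),
                    materials: [SimpleMaterial(color: .blue, isMetallic: false)]
                )
                // Roughly eye height, 1.5 m in front of the starting position.
                sphere.position = [0, 1.5, -1.5]
                content.add(sphere)
            }
        }
    }
}

struct LauncherView: View {
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace
    @Environment(\.dismissImmersiveSpace) private var dismissImmersiveSpace

    var body: some View {
        VStack(spacing: 20) {
            Button("Enter immersive scene") {
                Task {
                    // The result reports whether the space opened, was cancelled, or failed.
                    let result = await openImmersiveSpace(id: "explore")
                    if case .opened = result {
                        print("Immersive space opened")
                    }
                }
            }
            Button("Return to window") {
                Task { await dismissImmersiveSpace() }
            }
        }
        .padding()
    }
}
```

By default an `ImmersiveSpace` uses the mixed immersion style, so passthrough stays visible; the `immersionStyle(selection:in:)` scene modifier can offer progressive or full immersion instead.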

This fluidity allows users to interact with their surroundings while engaging with digital content, enhancing both productivity and entertainment.

visionOS’s ability to create truly immersive experiences is further supported by its integration with Apple’s powerful frameworks.

SwiftUI, RealityKit, and ARKit work together to provide developers with the tools needed to build sophisticated and responsive 3D interfaces.

These frameworks support advanced features like depth, gestures, and immersive scene types, making it easier to create applications that are not only visually stunning but also highly interactive.
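As a small example of SwiftUI and RealityKit working together, the sketch below lets the user drag a RealityKit entity with a standard SwiftUI gesture. The view name is hypothetical; the components and gesture calls are the ones RealityKit requires for entity-targeted gestures.

```swift
import SwiftUI
import RealityKit

/// A minimal sketch: a RealityKit entity the user can drag with a
/// SwiftUI gesture. "DraggableSphereView" is a hypothetical name.
struct DraggableSphereView: View {
    var body: some View {
        RealityView { content in
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)]
            )
            // An entity needs both an input target and a collision shape
            // before it can receive gestures.
            sphere.components.set(InputTargetComponent())
            sphere.generateCollisionShapes(recursive: false)
            content.add(sphere)
        }
        .gesture(
            DragGesture()
                .targetedToAnyEntity()
                .onChanged { value in
                    guard let parent = value.entity.parent else { return }
                    // Convert the 3D gesture location into the parent's space
                    // and move the entity there.
                    value.entity.position = value.convert(value.location3D, from: .local, to: parent)
                }
        )
    }
}
```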

Core Components of visionOS

visionOS is built on several core components that empower developers to create innovative and immersive applications. Central to these are windows, which are built with SwiftUI.

These windows can contain traditional views and controls, but visionOS goes a step further by letting developers add depth with 3D content. This makes interfaces more dynamic and engaging, and lets users view and interact with them from various angles.
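The sketch below shows a window view that mixes ordinary SwiftUI controls with a 3D model and a small depth offset. The view name and the asset name `Rocket` are hypothetical; `Model3D` loads the model asynchronously and shows the placeholder until it is ready.

```swift
import SwiftUI
import RealityKit

/// A minimal sketch of a window that mixes 2D controls with 3D content.
/// "RocketCardView" and the "Rocket" asset are hypothetical.
struct RocketCardView: View {
    var body: some View {
        VStack(spacing: 16) {
            Text("Toy Rocket")
                .font(.title)
                // Move the title 20 points toward the viewer.
                .offset(z: 20)

            Model3D(named: "Rocket") { model in
                model
                    .resizable()
                    .aspectRatio(contentMode: .fit)
            } placeholder: {
                ProgressView()
            }
            .frame(width: 300, height: 300)

            Button("Add to Cart") {
                // Hypothetical action; the details are app-specific.
            }
        }
        .padding()
    }
}
```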

Volumes are another essential aspect, introducing 3D scenes that can be viewed from any perspective within the Shared Space or an app’s Full Space.

Using RealityKit or Unity, developers can present 3D content in volumes, making the experience more lifelike and interactive.

Spaces in visionOS play a crucial role in organizing and presenting content. Apps launch into the Shared Space by default, allowing them to coexist alongside other applications in a manner similar to multiple apps on a desktop.

This setup provides users with the flexibility to reposition windows and volumes as needed. For a more immersive experience, apps can transition into a Full Space.

In this dedicated environment, only the app’s content is visible, enabling developers to create unbounded 3D experiences, open portals to new worlds, or fully immerse users in a custom environment.

The seamless integration of these core components with Apple’s robust frameworks further enhances the development process.

SwiftUI is the primary tool for building visionOS apps, offering extensive capabilities for creating 3D interfaces, managing depth, and incorporating immersive scene types.

It works closely with RealityKit, Apple’s 3D rendering engine, which supports realistic lighting, shadows, and visual effects that make digital content appear more natural and engaging.

For material authoring, RealityKit adopts MaterialX, an open industry standard for describing materials; the Shader Graph editor in Reality Composer Pro is built on it.
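A material authored this way can be loaded at runtime as a `ShaderGraphMaterial`. The sketch below assumes the `RealityKitContent` package that Xcode's visionOS app template generates; the material path `/Root/GlowMaterial` and the file `Materials.usda` are hypothetical names for a material created in Reality Composer Pro.

```swift
import SwiftUI
import RealityKit
import RealityKitContent // Package generated by Xcode's visionOS app template (assumed).

/// A minimal sketch: apply a Shader Graph material from Reality Composer Pro.
/// "/Root/GlowMaterial" and "Materials.usda" are hypothetical names.
struct MaterialDemoView: View {
    var body: some View {
        RealityView { content in
            let sphere = ModelEntity(mesh: .generateSphere(radius: 0.15))
            do {
                let material = try await ShaderGraphMaterial(
                    named: "/Root/GlowMaterial",
                    from: "Materials.usda",
                    in: realityKitContentBundle
                )
                sphere.model?.materials = [material]
            } catch {
                // Keep the untextured sphere if the material cannot be loaded.
                print("Failed to load material: \(error)")
            }
            content.add(sphere)
        }
    }
}
```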

ARKit on visionOS extends the platform’s capabilities by providing an in-depth understanding of the user’s surroundings. This allows for advanced interactions between digital content and the physical world.

Features like Plane Estimation, Scene Reconstruction, Image Anchoring, World Tracking, and Skeletal Hand Tracking enable developers to create applications that blend the real and digital worlds seamlessly, enhancing the user’s overall experience.
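Skeletal hand tracking is a good illustration of these ARKit data providers. In the minimal sketch below (the class name is hypothetical), the world-space position of each index fingertip is computed by combining the hand anchor's transform with the joint's transform relative to the hand. As with the other providers, an open immersive space and the user's hand-tracking permission are assumed.

```swift
import ARKit

/// A minimal sketch of skeletal hand tracking on visionOS.
/// "FingertipTracker" is a hypothetical name.
@MainActor
final class FingertipTracker {
    private let session = ARKitSession()
    private let hands = HandTrackingProvider()

    func start() async {
        guard HandTrackingProvider.isSupported else { return }
        do {
            try await session.run([hands])
        } catch {
            print("Hand tracking failed to start: \(error)")
            return
        }

        for await update in hands.anchorUpdates {
            let hand = update.anchor
            guard hand.isTracked,
                  let tip = hand.handSkeleton?.joint(.indexFingerTip),
                  tip.isTracked else { continue }
            // World pose of the joint = hand pose in world space x joint pose relative to the hand.
            let worldFromTip = hand.originFromAnchorTransform * tip.anchorFromJointTransform
            print("\(hand.chirality) index fingertip at \(worldFromTip.columns.3)")
        }
    }
}
```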

visionOS also prioritizes accessibility, ensuring that apps are usable by everyone. Features such as eye and voice control, as well as alternative navigation methods like Pointer Control, allow for diverse ways of interacting with content.

Accessibility Features

One key feature is the ability for users to control their device entirely with their eyes or voice. This allows hands-free navigation and interaction, making the technology accessible to people with mobility impairments or other disabilities that make traditional input methods difficult.

In addition to eye and voice control, visionOS introduces Pointer Control, which offers alternative navigation options.

Users can select their index finger, wrist, or head as an alternative pointer, providing flexibility and adaptability in how they interact with digital content.

This feature caters to a wide range of user preferences and needs, ensuring that everyone can find a method that works best for them.

Developers can create accessible apps for visionOS using the same techniques and tools they are already familiar with on other Apple platforms.

This includes leveraging SwiftUI and other Apple frameworks to build interfaces that are not only visually appealing but also usable by people with various disabilities.

By incorporating features like adjustable text sizes, VoiceOver support, and high-contrast modes, developers can ensure their apps provide an excellent experience for all users.
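The sketch below shows the same ideas in a view that mixes SwiftUI and RealityKit: an icon-only button gets a spoken label and hint, text uses a Dynamic Type text style, and a 3D entity is described for VoiceOver with RealityKit's `AccessibilityComponent`. The view name and label strings are hypothetical.

```swift
import SwiftUI
import RealityKit

/// A minimal sketch of accessibility metadata in a mixed 2D/3D view.
/// "AccessibleGlobeView" and the strings are hypothetical.
struct AccessibleGlobeView: View {
    var body: some View {
        VStack {
            RealityView { content in
                let globe = ModelEntity(
                    mesh: .generateSphere(radius: 0.15),
                    materials: [SimpleMaterial(color: .blue, isMetallic: false)]
                )
                // Describe the 3D entity for VoiceOver.
                var accessibility = AccessibilityComponent()
                accessibility.isAccessibilityElement = true
                accessibility.label = "Globe"
                globe.components.set(accessibility)
                content.add(globe)
            }

            Button {
                // Hypothetical: reset the globe's orientation here.
            } label: {
                Image(systemName: "arrow.counterclockwise")
            }
            // An icon-only button needs a spoken label.
            .accessibilityLabel("Reset globe")
            .accessibilityHint("Returns the globe to its starting orientation")

            Text("Explore the globe")
                .font(.body) // Text styles scale with the user's Dynamic Type setting.
        }
        .padding()
    }
}
```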

visionOS’s commitment to accessibility extends to its immersive and spatial capabilities. For instance, users who rely on voice commands or eye tracking can still fully engage with 3D environments and augmented reality experiences.

The system is designed to respond to these input methods, so users can navigate and interact with complex spatial content much as they would with more conventional interfaces.

visionOS represents a significant leap forward in spatial computing, offering developers a robust platform to create immersive and interactive applications.

With advanced features like Volumetric APIs, TabletopKit, and enhanced inputs, along with powerful development tools and accessibility options, visionOS enables a new era of app development.